Web Crawler Directory & Bot Database

Identify, check, and manage unwanted web crawlers and bots.

#Analytics

#SEO

#Developer Tools


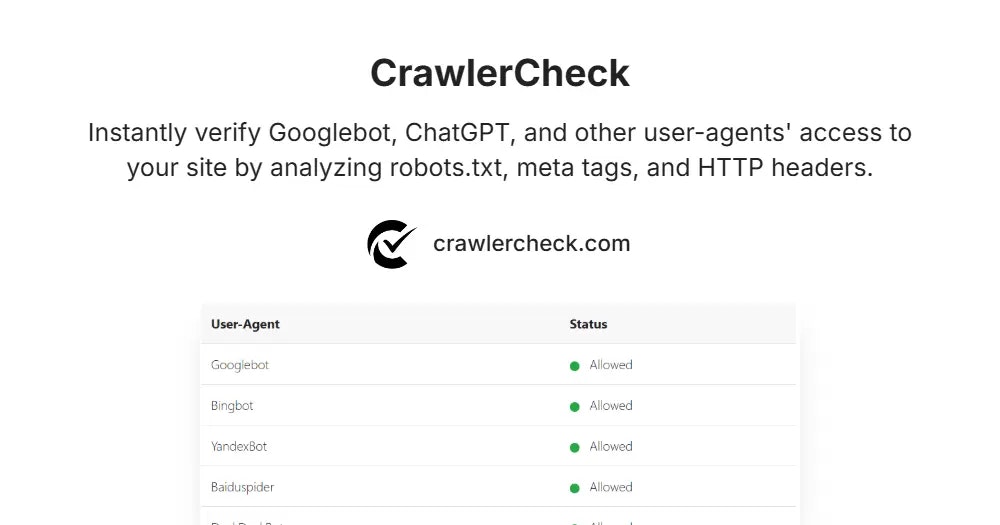

Summary: This tool provides a searchable database of more than 150 active web crawlers, including Googlebot and GPTBot, to help identify the AI bots, scrapers, and search engine crawlers that scan websites. It offers safety classifications and robots.txt rules for blocking unwanted bots.

What it does

It lists web crawlers with safety verdicts and provides the exact robots.txt syntax for blocking specific bots. Users can also run live tests to check whether a bot is allowed or blocked on their site.
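As a rough illustration of what a robots.txt check evaluates, the sketch below uses Python's standard-library `urllib.robotparser` to test a sample robots.txt that blocks GPTBot while allowing all other crawlers. The rules and the `is_allowed` helper are hypothetical examples, not output from the directory, and the tool's live test is not necessarily implemented this way. Keep in mind that robots.txt is advisory: it only stops crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block GPTBot site-wide, allow every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "Googlebot"):
        allowed = is_allowed(ROBOTS_TXT, agent, "https://example.com/page")
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

`RobotFileParser` keeps only the text before the first `/` of the user agent you pass, so supply the crawler's product token (for example `"GPTBot"`) rather than a full User-Agent header string.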

Who it's for

Web developers, SEO professionals, and site owners who want to identify the crawlers and bots appearing in their server logs and control their access.
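A minimal sketch of spotting crawlers in a server log, assuming the common Combined Log Format where the User-Agent is the last double-quoted field. The `KNOWN_CRAWLERS` table, its category labels, and the sample log lines are hypothetical stand-ins for a real directory lookup. User-Agent strings are self-reported and can be spoofed, so a match shows what a client claims to be, not proof of its identity.

```python
import re
from collections import Counter

# Hypothetical mini-directory: User-Agent token -> illustrative category.
KNOWN_CRAWLERS = {
    "Googlebot": "search engine",
    "bingbot": "search engine",
    "GPTBot": "AI crawler",
}

# In Combined Log Format, the User-Agent is the last double-quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')

# Sample lines using documentation IP ranges (RFC 5737).
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2024:13:55:40 +0000] "GET /blog HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1)"',
    '198.51.100.4 - - [10/Oct/2024:13:56:02 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def identify_crawler(user_agent):
    """Return (token, category) for the first known token in user_agent, or None."""
    lowered = user_agent.lower()
    for token, category in KNOWN_CRAWLERS.items():
        if token.lower() in lowered:
            return token, category
    return None


def count_crawler_hits(log_lines):
    """Count requests per known crawler; lines without a known token are skipped."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if match is None:
            continue
        hit = identify_crawler(match.group(1))
        if hit is not None:
            counts[hit] += 1
    return counts


if __name__ == "__main__":
    for (token, category), hits in count_crawler_hits(SAMPLE_LOG).items():
        print(f"{token} ({category}): {hits} request(s)")
```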

Why it matters

It simplifies identifying unknown bots and managing their access, helping site owners reduce unwanted or aggressive crawling.