Inference Engine by GMI Cloud

Fast multimodal-native inference at scale

#Developer Tools

#Artificial Intelligence

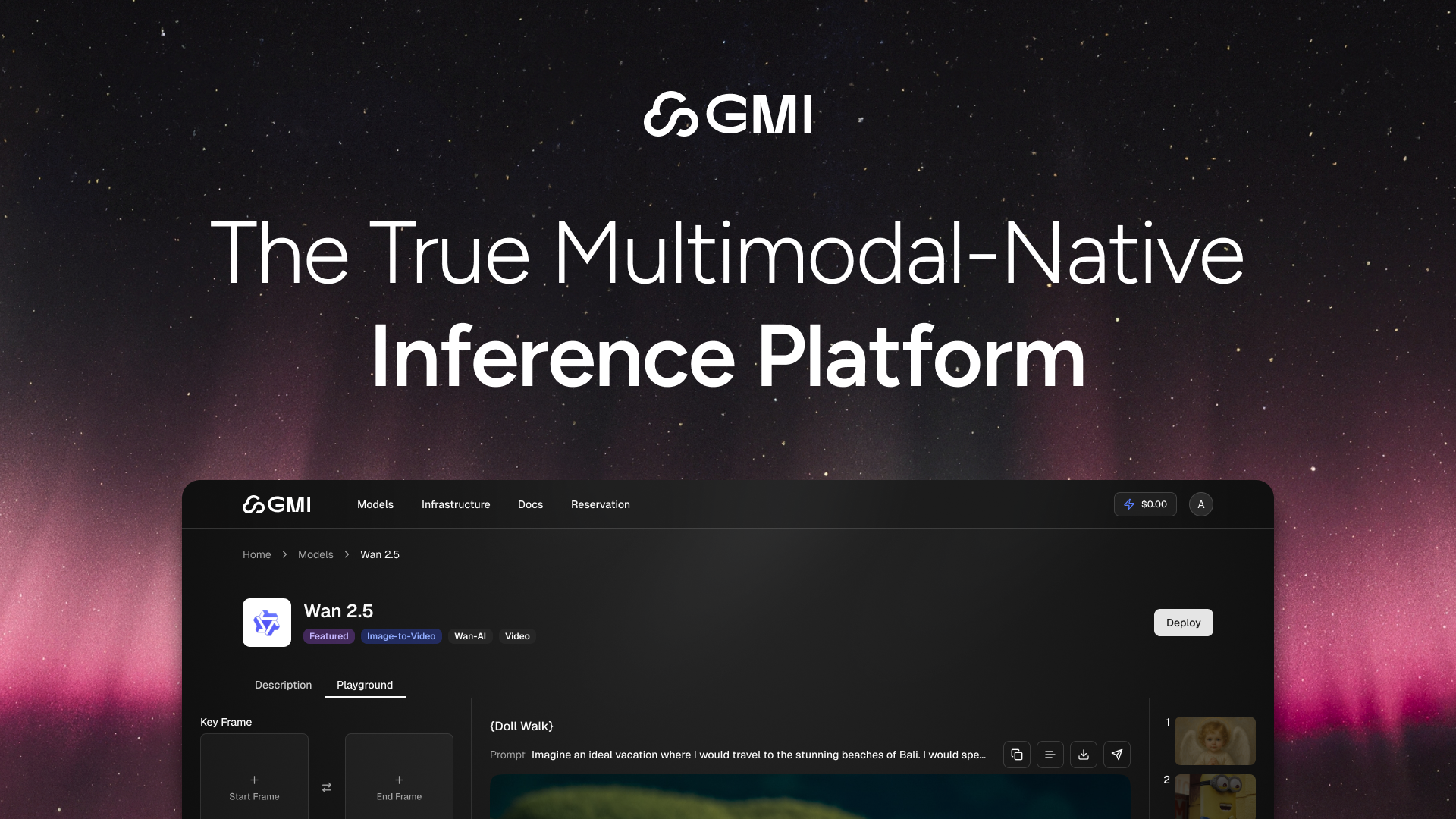

Summary: GMI Inference Engine is a unified platform for running text, image, video, and audio inference. It offers enterprise-grade scaling, observability, and model versioning, and is described as delivering 5–6× faster performance for real-time multimodal applications.

What it does

It processes text, image, video, and audio in a single pipeline, enabling faster, more scalable inference, and includes tools for observability and model version management.

Who it's for

Developers and enterprises building real-time multimodal applications that need scalable, efficient inference.

Why it matters

It accelerates multimodal inference workflows, ensuring real-time performance and operational control at scale.