Oprel

Local AI That Actually Uses Your Hardware Properly

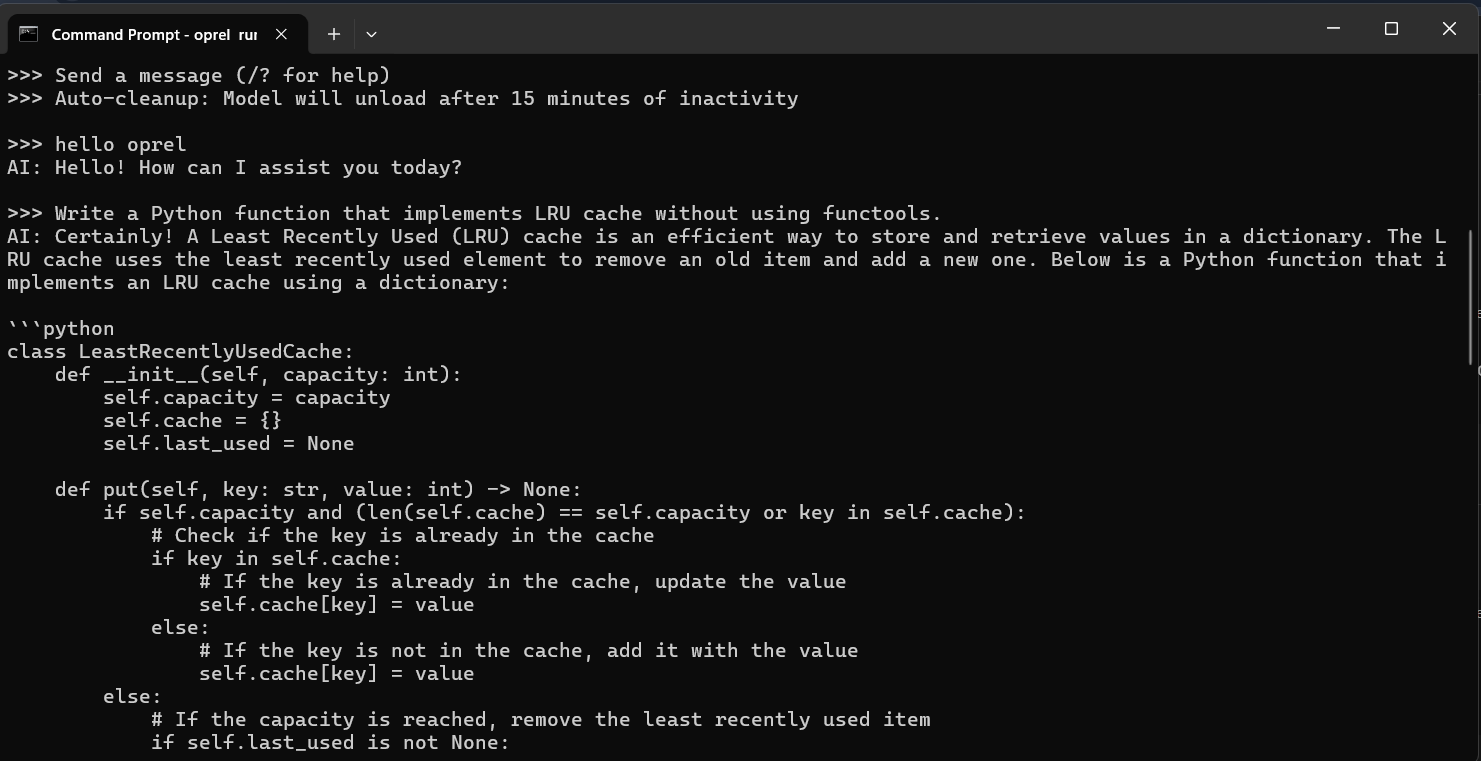

Oprel – Local AI runtime optimized for your hardware

Summary: Oprel is a Python library for running large language models locally with advanced memory management and hardware-aware optimization. It supports hybrid GPU/CPU offloading, automatic quantization, and batching to maximize performance across a range of devices from CPUs to high-end GPUs.
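To show what automatic quantization involves, here is a sketch that estimates a model's weight footprint at a few precisions and picks the most precise one that fits a memory budget. It is not Oprel's API. The function names, the format labels, the bits-per-weight figures, and the 1.2 overhead factor are illustrative assumptions.

```python
from typing import Optional

# Hypothetical sketch, not Oprel's API. Approximate bits per weight for a
# few illustrative formats; real quantized formats add small per-block
# scale overheads that are ignored here.
BITS_PER_WEIGHT = {"fp16": 16.0, "q8": 8.0, "q4": 4.0}

# Assumed headroom multiplier for the KV cache and activations.
OVERHEAD = 1.2


def weight_bytes(n_params: int, fmt: str) -> float:
    """Estimated size of the model weights in bytes for a given format."""
    return n_params * BITS_PER_WEIGHT[fmt] / 8


def pick_quantization(n_params: int, budget_bytes: int) -> Optional[str]:
    """Return the most precise format whose estimated footprint fits.

    Formats are tried from most to least precise. None means no format
    fits, so part of the model would have to be offloaded to the CPU.
    """
    for fmt in ("fp16", "q8", "q4"):
        if weight_bytes(n_params, fmt) * OVERHEAD <= budget_bytes:
            return fmt
    return None


if __name__ == "__main__":
    GiB = 2**30
    for budget in (24, 8, 4, 2):
        print(budget, "GiB ->", pick_quantization(7_000_000_000, budget * GiB))
```

Under these assumptions, a 7-billion-parameter model with an 8 GiB budget gets "q8". The fp16 estimate, about 16.8 GB with overhead, does not fit.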

What it does

Oprel detects the available hardware and selects the best-suited backend, applying hybrid offloading and quantization so that large models run efficiently. If CUDA fails, it falls back to the CPU. On high-end GPUs, it uses batching to improve throughput.
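Hybrid offloading and CPU fallback can be sketched roughly as follows. This is a hypothetical illustration, not Oprel's implementation. The Plan type, plan_offload, load_with_fallback, the equal-size-layer model, and the 512 MiB reserve are all assumptions. Layers that fit in free VRAM go to the GPU and the rest stay in system RAM. If a CUDA load fails, it is retried entirely on the CPU.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

MiB = 2**20


@dataclass
class Plan:
    """Hypothetical placement plan (not an Oprel type)."""
    backend: str     # "cuda" or "cpu"
    gpu_layers: int  # layers placed in VRAM
    cpu_layers: int  # layers kept in system RAM


def plan_offload(n_layers: int, layer_bytes: int, free_vram: int,
                 reserve: int = 512 * MiB) -> Plan:
    """Put as many equally sized layers on the GPU as free VRAM allows.

    `reserve` is an assumed safety margin for the CUDA context and KV cache.
    """
    usable = max(0, free_vram - reserve)
    gpu_layers = min(n_layers, usable // layer_bytes)
    if gpu_layers == 0:
        return Plan("cpu", 0, n_layers)
    return Plan("cuda", gpu_layers, n_layers - gpu_layers)


def load_with_fallback(plan: Plan,
                       load: Callable[[Plan], object]) -> Tuple[object, Plan]:
    """Load with the planned backend; if a CUDA load raises RuntimeError,
    retry with every layer on the CPU."""
    if plan.backend == "cuda":
        try:
            return load(plan), plan
        except RuntimeError:
            plan = Plan("cpu", 0, plan.gpu_layers + plan.cpu_layers)
    return load(plan), plan


if __name__ == "__main__":
    plan = plan_offload(n_layers=32, layer_bytes=400 * MiB,
                        free_vram=8 * 1024 * MiB)
    print(plan)
```

Take 32 layers of 400 MiB each and 8 GiB of free VRAM. In that case, plan_offload places 19 layers on the GPU and 13 on the CPU. Because the loader is passed in as a callable, the sketch stays independent of any particular inference backend.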

Who it's for

Developers who run AI models locally on diverse hardware, from CPU-only machines to mid-range and high-end GPUs, and who need those resources used efficiently.

Why it matters

Many local AI tools either require a high-end GPU or underuse the hardware they run on. Oprel addresses both problems by adapting automatically to the available resources and managing memory intelligently.