Concilium

Stop comparing AI outputs. Let them review each other.

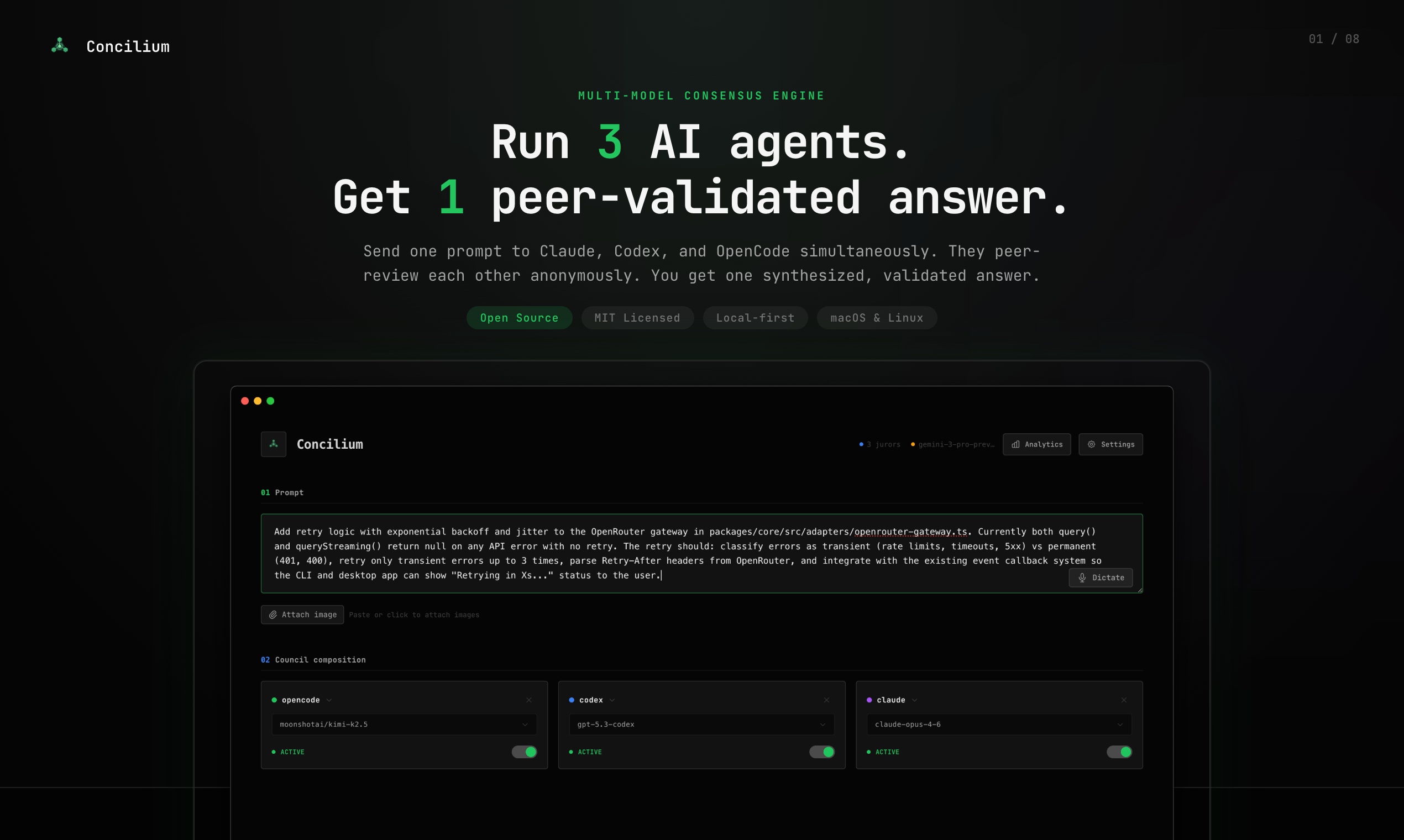

Concilium – AI models review each other's coding outputs

Summary: Concilium runs Claude, OpenAI Codex, and OpenCode on the same coding prompt in parallel. It then has juror AI models review the responses anonymously and synthesize them into a single reviewed answer. It runs locally, offers both a CLI and a desktop app, and is open source, for code privacy and transparency.

What it does

Concilium sends one prompt to multiple AI coding models in parallel, collects their outputs, and has juror models evaluate each response anonymously, without knowing which model produced it. A synthesis step then combines the strongest parts into one final answer.
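The fan-out, anonymous review, and synthesis flow described above can be sketched in Python. This is a minimal sketch under stated assumptions, not Concilium's implementation: the `Model`, `Juror`, and `Synthesizer` interfaces, the `fan_out`, `review`, and `run_council` functions, and the mean-score ranking rule are all hypothetical. The stub models and jurors in `demo` stand in for real calls to Claude, Codex, or OpenCode.

```python
import asyncio
import random
from dataclasses import dataclass
from typing import Awaitable, Callable

# Hypothetical interfaces (assumptions, not Concilium's actual API):
Model = Callable[[str], Awaitable[str]]                   # prompt -> answer
Juror = Callable[[str, str], Awaitable[float]]            # (prompt, answer) -> score
Synthesizer = Callable[[str, list[str]], Awaitable[str]]  # (prompt, ranked answers) -> final


@dataclass
class Candidate:
    label: str   # anonymous label shown to jurors, e.g. "Response A"
    source: str  # real model name, never passed to jurors
    text: str


async def fan_out(prompt: str, models: dict[str, Model]) -> list[Candidate]:
    """Send one prompt to every model concurrently, then shuffle and relabel."""
    names = list(models)
    outputs = await asyncio.gather(*(models[name](prompt) for name in names))
    pairs = list(zip(names, outputs))
    random.shuffle(pairs)  # label order reveals nothing about the source model
    return [
        Candidate(label=f"Response {chr(ord('A') + i)}", source=name, text=text)
        for i, (name, text) in enumerate(pairs)
    ]


async def review(prompt: str, candidates: list[Candidate],
                 jurors: list[Juror]) -> dict[str, float]:
    """Every juror scores every answer, seeing only the prompt and the text."""
    scores: dict[str, float] = {}
    for c in candidates:
        votes = await asyncio.gather(*(juror(prompt, c.text) for juror in jurors))
        scores[c.label] = sum(votes) / len(votes)  # mean juror score
    return scores


async def run_council(prompt: str, models: dict[str, Model], jurors: list[Juror],
                      synthesize: Synthesizer) -> str:
    """Fan out, review anonymously, rank by score, then synthesize."""
    candidates = await fan_out(prompt, models)
    scores = await review(prompt, candidates, jurors)
    ranked = sorted(candidates, key=lambda c: scores[c.label], reverse=True)
    return await synthesize(prompt, [c.text for c in ranked])


# Demo with stub models and jurors (placeholders for real model calls).
async def demo() -> str:
    async def model_x(prompt: str) -> str:
        return "def add(a, b):\n    return a + b"

    async def model_y(prompt: str) -> str:
        return "def add(a, b):\n    print(a + b)"

    async def returns_value(prompt: str, answer: str) -> float:
        return 1.0 if "return" in answer else 0.0

    async def prefers_short(prompt: str, answer: str) -> float:
        return 1.0 / len(answer)

    async def take_best(prompt: str, ranked: list[str]) -> str:
        # A real synthesizer would merge the best parts; this stub keeps the top answer.
        return ranked[0]

    return await run_council("Write add(a, b)", {"x": model_x, "y": model_y},
                             [returns_value, prefers_short], take_best)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In this sketch, anonymity comes from shuffling before relabeling and from passing jurors only the prompt and the answer text, never `source`. Averaging juror scores is just one simple aggregation choice, and the `take_best` stub only picks the top-ranked answer, whereas the synthesis step described above combines parts of several responses.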

Who it's for

Developers who want to compare and validate AI-generated code from several models without cross-checking the outputs by hand.

Why it matters

Instead of manually reconciling conflicting AI outputs, you get an automated peer-review and synthesis step, which saves time and reduces uncertainty in coding tasks.