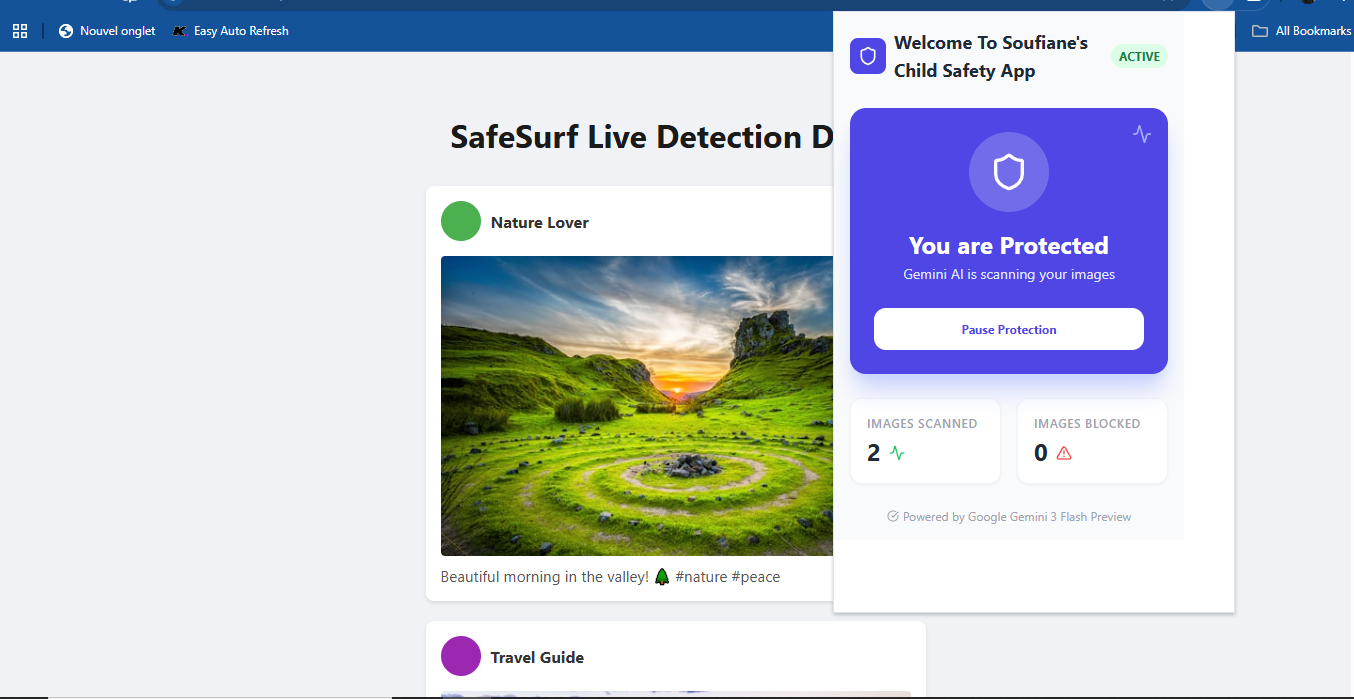

Nudity & Violence Detection Extension

Using AI to Detect Harmful Visual Content in Real Time


Summary: This extension uses AI to detect nudity, sexual content, and violence in images during web browsing. It analyzes images in real time without storing or sharing user data, enabling safer browsing and content moderation.

What it does

The extension collects image URLs from the pages a user visits, preprocesses the images, and sends them to the Gemini API for analysis. The Gemini vision model then classifies each image as safe or sensitive, depending on whether it contains nudity or violence.
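The flow described above can be sketched roughly as follows. This is a minimal illustration, not the extension's actual code: every name here (`collectImageUrls`, `parseVerdict`, `classifyImages`, `VisionModel`) is hypothetical, the Gemini API call is represented by a caller-supplied function so that no request format is assumed, and the preprocessing step is omitted because the description does not say what it involves. The sketch also assumes the model is prompted to answer with the single word "safe" or "sensitive".

```typescript
// Hypothetical names throughout; none come from the extension's source.
type Verdict = "safe" | "sensitive";

// Assumed shape: a function that sends one image URL to a vision model
// (for example, a wrapper around the Gemini API) and resolves with the
// model's raw text reply.
type VisionModel = (imageUrl: string) => Promise<string>;

// Step 1: resolve image sources against the page URL, keep only
// http(s) URLs, and drop duplicates (insertion order is preserved).
function collectImageUrls(srcs: string[], pageUrl: string): string[] {
  const seen = new Set<string>();
  for (const src of srcs) {
    try {
      const url = new URL(src, pageUrl);
      if (url.protocol === "http:" || url.protocol === "https:") {
        seen.add(url.href);
      }
    } catch {
      // Skip values that cannot be parsed as URLs.
    }
  }
  return Array.from(seen);
}

// Step 2: map the model's reply to a verdict. Anything other than a
// clear "safe" is treated as sensitive (an assumed fail-closed policy).
function parseVerdict(reply: string): Verdict {
  return reply.trim().toLowerCase() === "safe" ? "safe" : "sensitive";
}

// Step 3: classify every collected image, one request at a time.
async function classifyImages(
  srcs: string[],
  pageUrl: string,
  model: VisionModel
): Promise<Map<string, Verdict>> {
  const results = new Map<string, Verdict>();
  for (const url of collectImageUrls(srcs, pageUrl)) {
    results.set(url, parseVerdict(await model(url)));
  }
  return results;
}
```

Treating any unclear reply as sensitive is one possible design choice: it favors over-blocking when the model's answer cannot be parsed, which may suit a safety-oriented tool but would increase false positives.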

Who it's for

It is designed for users seeking automated detection of harmful visual content, including parents and content moderators.

Why it matters

It reduces exposure to explicit or violent images online by identifying sensitive content in real time, making internet use safer.