Next.js Evals

Performance results of AI coding agents on Next.js

Next.js Evals – Benchmarking AI coding agents on Next.js tasks

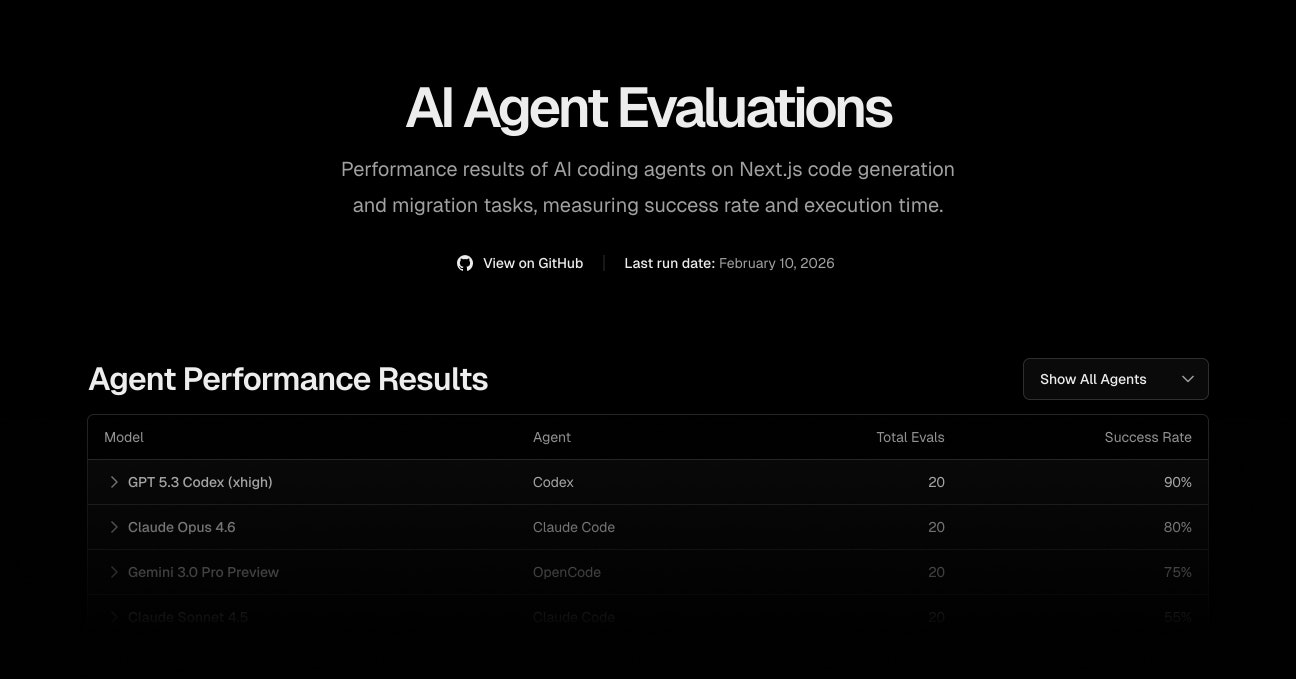

Summary: Next.js Evals measures how well AI coding agents handle Next.js code generation and migration tasks. It tracks success rates and execution times, and its benchmarks are updated daily.

What it does

It runs AI models against Next.js tasks and reports each one's success rate and execution time, so coding agents can be compared side by side.
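As a rough illustration of how the two reported metrics could be derived from raw runs, here is a minimal sketch. It is not the project's actual implementation: the `EvalRun` shape, the `summarize` function, the agent names, and the sample numbers are all hypothetical, and the real benchmark may weight or aggregate results differently.

```typescript
// Hypothetical record for one agent attempting one task (assumed shape, not the project's schema).
interface EvalRun {
  agent: string;
  task: string;
  passed: boolean;
  durationSeconds: number;
}

// Hypothetical per-agent summary: the two metrics the page mentions.
interface AgentSummary {
  agent: string;
  runs: number;
  successRate: number; // fraction of runs that passed, between 0 and 1
  meanDurationSeconds: number;
}

function summarize(runs: EvalRun[]): AgentSummary[] {
  // Group runs by agent name.
  const byAgent = new Map<string, EvalRun[]>();
  for (const run of runs) {
    const group = byAgent.get(run.agent) ?? [];
    group.push(run);
    byAgent.set(run.agent, group);
  }

  // Compute success rate and mean execution time for each agent.
  const summaries: AgentSummary[] = [];
  byAgent.forEach((group, agent) => {
    const passedCount = group.filter((r) => r.passed).length;
    const totalDuration = group.reduce((sum, r) => sum + r.durationSeconds, 0);
    summaries.push({
      agent,
      runs: group.length,
      successRate: passedCount / group.length,
      meanDurationSeconds: totalDuration / group.length,
    });
  });

  // Rank by success rate (highest first); break ties by faster mean time.
  return summaries.sort(
    (a, b) =>
      b.successRate - a.successRate ||
      a.meanDurationSeconds - b.meanDurationSeconds
  );
}

// Made-up sample data, purely for illustration.
const sampleRuns: EvalRun[] = [
  { agent: "agent-a", task: "generate-route", passed: true, durationSeconds: 10 },
  { agent: "agent-a", task: "migrate-pages", passed: false, durationSeconds: 20 },
  { agent: "agent-b", task: "generate-route", passed: true, durationSeconds: 30 },
  { agent: "agent-b", task: "migrate-pages", passed: true, durationSeconds: 50 },
];

const ranking = summarize(sampleRuns);
```

In this sketch the slower agent ranks first because success rate is treated as the primary metric and execution time only as a tie-breaker; that ordering is a design assumption, not something the page specifies.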

Who it's for

Developers and researchers assessing AI coding models for Next.js code generation and migration.

Why it matters

It shows which AI coding agents perform best on Next.js tasks, helping developers make an informed model choice.