VisualFlow

Make LLM prompts debuggable.

#Design Tools

#Developer Tools

#Artificial Intelligence

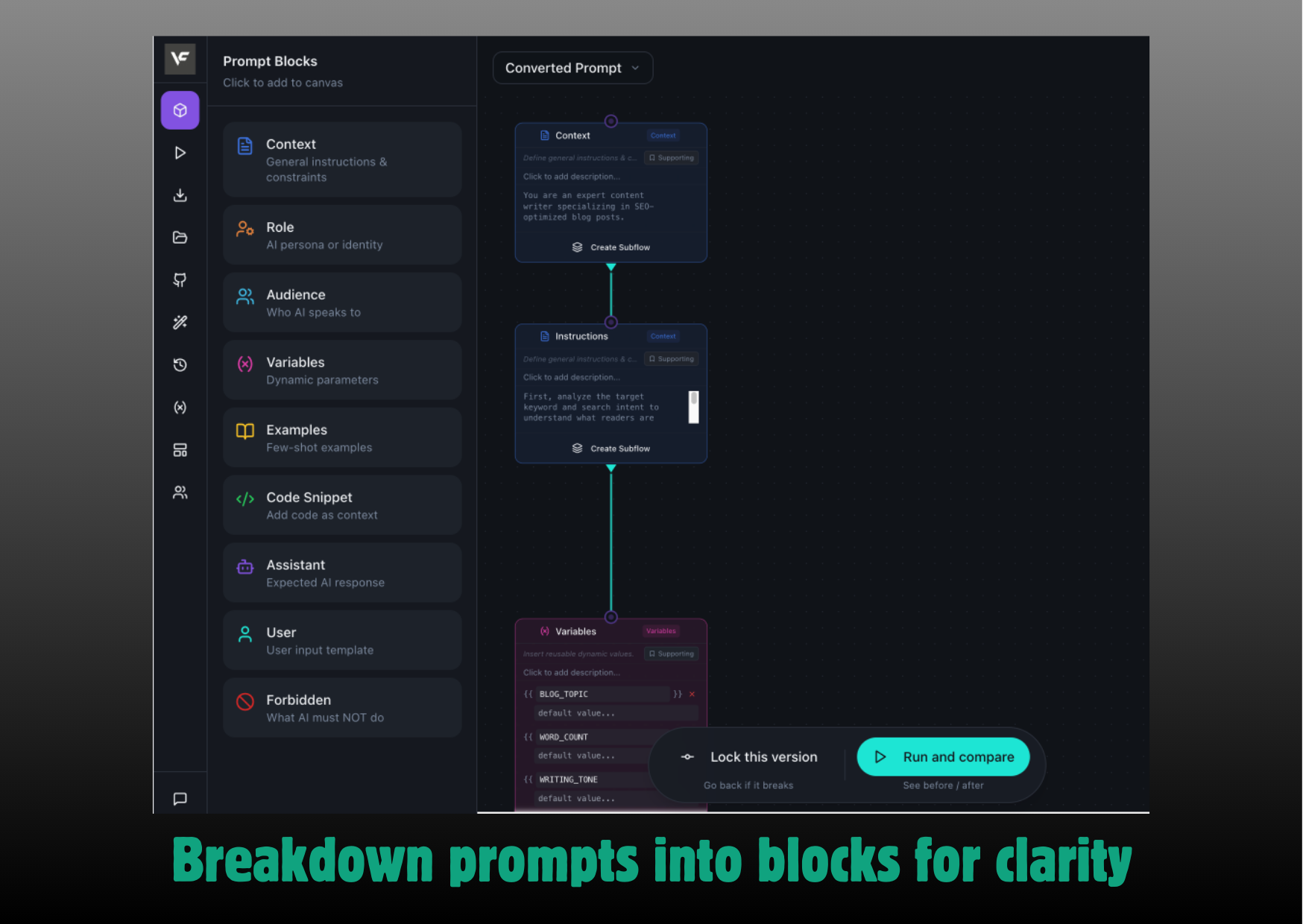

Summary: VisualFlow helps users identify which parts of a prompt affect LLM outputs by enabling controlled, step-by-step testing of changes and easy rollback to previous versions. Prompts tend to grow fragile as small edits accumulate; VisualFlow addresses this by making each iteration transparent and manageable.

What it does

VisualFlow shows which prompt segments impact the output, allows testing one change at a time, and lets users revert to earlier working prompts.
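
The idea can be illustrated with a minimal sketch. This is not VisualFlow's implementation or API, which the listing does not describe. The names `segment_impact`, `PromptHistory`, and `fake_model` are hypothetical, and `fake_model` is a deterministic stand-in for a real LLM call. The sketch removes one prompt segment at a time, checks whether the output changes, and keeps every saved version so an earlier one can be restored.

```python
from typing import Callable, List


def segment_impact(segments: List[str], run: Callable[[str], str]) -> List[bool]:
    """For each segment, report whether removing it alone changes the output."""
    baseline = run("\n".join(segments))
    impact = []
    for i in range(len(segments)):
        # Drop exactly one segment so any change can be attributed to it.
        ablated = segments[:i] + segments[i + 1:]
        impact.append(run("\n".join(ablated)) != baseline)
    return impact


class PromptHistory:
    """Hypothetical version store: keeps every saved prompt for rollback."""

    def __init__(self, initial: List[str]) -> None:
        self._versions = [list(initial)]

    @property
    def current(self) -> List[str]:
        return list(self._versions[-1])

    def commit(self, segments: List[str]) -> int:
        """Save a new version and return its index."""
        self._versions.append(list(segments))
        return len(self._versions) - 1

    def rollback(self, version: int) -> List[str]:
        """Restore an earlier version by re-saving it, so no history is lost."""
        self._versions.append(list(self._versions[version]))
        return self.current


def fake_model(prompt: str) -> str:
    """Assumed stand-in for an LLM: output depends only on one keyword."""
    return "formal" if "formal" in prompt.lower() else "casual"


segments = [
    "You are a helpful assistant.",
    "Use a formal tone.",
    "Answer briefly.",
]
impact = segment_impact(segments, fake_model)

history = PromptHistory(segments)
history.commit(segments + ["Be playful."])  # one change at a time
restored = history.rollback(0)              # back to the known-good prompt
```

With this stand-in model, only the second segment is flagged as affecting the output. Real LLM outputs are usually not deterministic, so exact string comparison is a simplifying assumption; a practical version would need repeated runs or a similarity measure.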

Who it's for

It is designed for people who write LLM prompts and need to debug and iterate on them safely.

Why it matters

Incremental prompt changes can shift outputs unpredictably. VisualFlow targets this problem by making those changes visible and reversible.