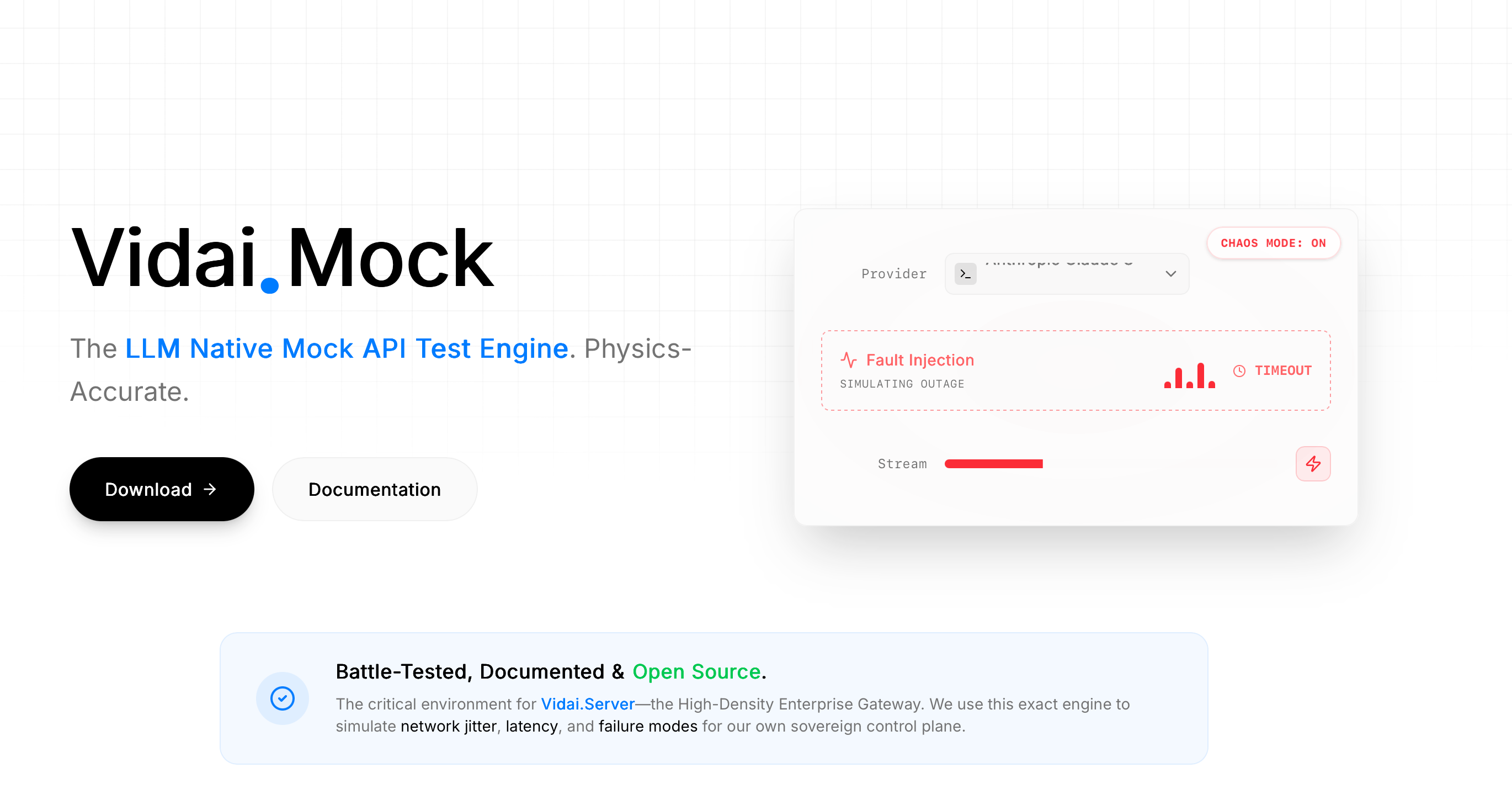

VidaiMock

Open Source LLM Native Mock API Test Engine

Summary: VidaiMock is a lightweight, Rust-based simulator that emulates the OpenAI, Anthropic, Gemini, and other LLM APIs with zero configuration. It reproduces realistic streaming and token timing so that AI applications can be tested locally, which reduces API costs and enables chaos testing.

What it does

VidaiMock runs as a single ~7 MB binary that mimics real-world LLM API behavior, including token-by-token streaming and wire-protocol timing. It supports dynamic responses via Tera templates (Tera is a Rust template engine with Jinja2-like syntax) and simulates failure conditions such as slow connections or crashes.
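To make "token-by-token streaming" concrete, here is a minimal sketch of the client-side code such a mock is meant to exercise: it reassembles text from an OpenAI-style server-sent-events stream. The function name `collect_stream_text` and the `SAMPLE_STREAM` data are hypothetical; the sample is hand-written in the shape of OpenAI chat completion chunks and is not captured output from VidaiMock.

```python
import json


def collect_stream_text(lines):
    """Assemble assistant text from OpenAI-style SSE lines (hypothetical helper)."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # sentinel that marks the end of the stream
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


# Hand-written sample in the shape of OpenAI chat completion chunks (an assumption,
# not real VidaiMock output).
SAMPLE_STREAM = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "lo"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}',
    "",
    "data: [DONE]",
]

if __name__ == "__main__":
    print(collect_stream_text(SAMPLE_STREAM))
```

In a real test, the lines would come from an HTTP response streamed by the local mock instead of a hard-coded list, so the same parsing code runs against simulated timing and failures without calling a paid API.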

Who it's for

It is designed for developers building AI-powered applications who need to test LLM integrations without incurring API expenses or relying on external services.

Why it matters

VidaiMock eliminates the cost and latency of live API calls during development by providing a local, configurable environment that replicates real LLM interactions and failure scenarios.