TuneKit

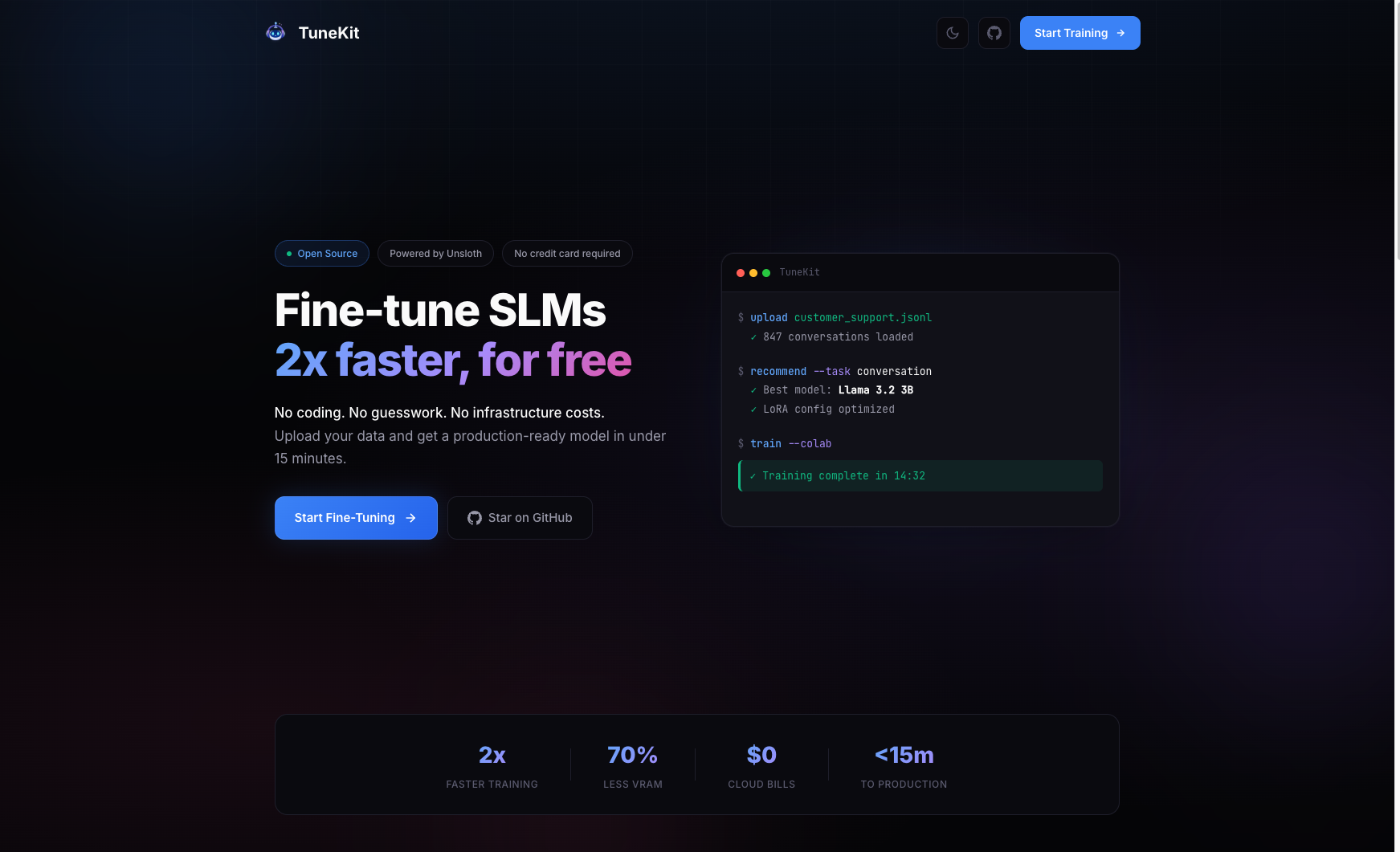

Fine-tune SLMs 2x faster, for free

TuneKit – Streamlined fine-tuning of SLMs with automated setup

Summary: TuneKit automates fine-tuning of small language models (SLMs) by analyzing uploaded JSONL data, recommending a model, optimizing hyperparameters, and generating a ready-to-run Colab notebook. It supports multiple model families and enables free training on Colab, with no GPU rental and no training scripts to write.

What it does

TuneKit analyzes the uploaded JSONL dataset to recommend a model and hyperparameters, then generates a Colab notebook that trains on a free T4 GPU. Trained models can be exported in GGUF, HuggingFace, and LoRA formats.
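To make the data-analysis step concrete, here is a minimal sketch of the kind of check a tool could run on a JSONL upload before recommending anything. It is not TuneKit's actual implementation: the function name `summarize_jsonl`, the returned fields, and the `prompt`/`completion` keys in the usage example are all assumptions chosen for illustration.

```python
import json
from collections import Counter


def summarize_jsonl(text: str) -> dict:
    """Summarize JSONL text (hypothetical helper, not part of TuneKit).

    Returns the number of valid records, the 1-based line numbers that
    failed to parse as JSON objects, how often each top-level key
    appears, and the mean serialized record length in characters.
    """
    records = []
    bad_lines = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue  # blank lines are ignored, not counted as errors
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            bad_lines.append(line_no)
            continue
        if isinstance(obj, dict):
            records.append(obj)
        else:
            bad_lines.append(line_no)  # valid JSON, but not an object

    key_counts = Counter(key for record in records for key in record)
    total_chars = sum(len(json.dumps(r, ensure_ascii=False)) for r in records)
    return {
        "num_records": len(records),
        "bad_lines": bad_lines,
        "key_counts": dict(key_counts),
        "mean_chars": total_chars / len(records) if records else 0.0,
    }


if __name__ == "__main__":
    sample = (
        '{"prompt": "Hi", "completion": "Hello"}\n'
        "not json\n"
        "\n"
        '{"prompt": "Bye", "completion": "See you"}\n'
    )
    print(summarize_jsonl(sample))
```

Statistics like record count and typical record length are plausible inputs to a model or hyperparameter recommendation, but how TuneKit weighs them is not stated here.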

Who it's for

Users who want to fine-tune SLMs efficiently without manual setup or coding. Supported model families include Llama 3.2, Phi-4, Mistral, Qwen, and Gemma.

Why it matters

It removes manual setup and scripting, and cuts fine-tuning time and cost by automating configuration and running on free Colab resources.