ThriftyAI

Cut AI costs by up to 80% with intelligent semantic caching.

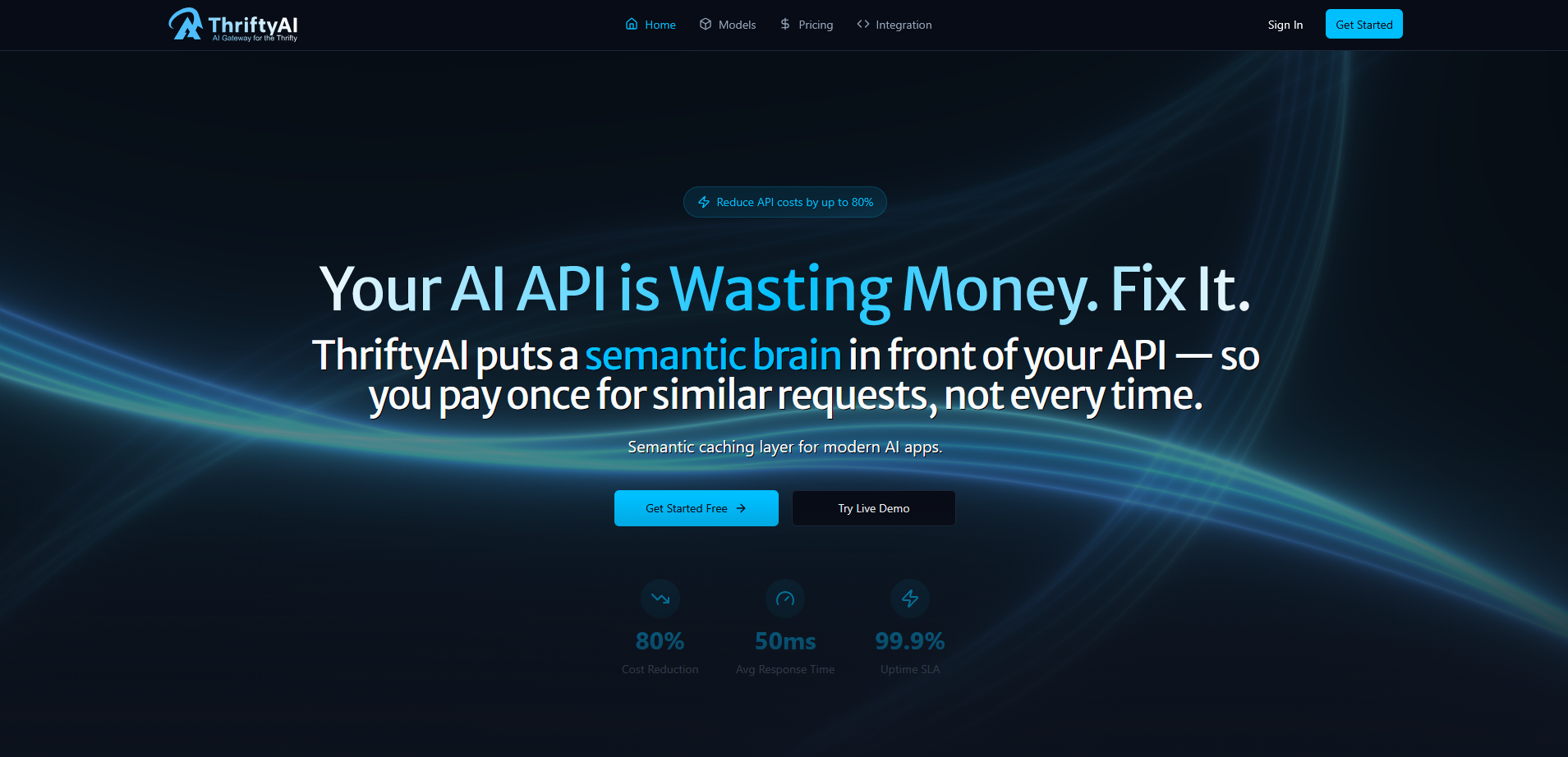

ThriftyAI – Intelligent semantic caching to reduce AI costs

Summary: ThriftyAI provides semantic caching for OpenAI, Anthropic, and Google AI, cutting costs by up to 80% and making responses 10x faster. It matches requests by intent rather than exact text, so repeated or similar queries can be answered from cache in under 50 ms. The platform also includes PII masking, automatic provider fallback, and budget controls for API keys.
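ThriftyAI's exact matching method isn't described here. The Python below is a minimal sketch of the general idea of semantic caching. It assumes that each prompt is turned into a vector and that a cached answer is returned when a new prompt's cosine similarity to a stored prompt clears a threshold. The `embed` function is a hypothetical stand-in that uses bag-of-words counts, which only match overlapping wording. A real semantic cache would call an embedding model so that paraphrases with different words also match. `SemanticCache`, `threshold`, and the 0.75 cutoff are illustrative choices, not ThriftyAI's API.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: bag-of-words counts.

    A real semantic cache would call an embedding model here so that
    paraphrases with different wording still land close together.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Illustrative cache (hypothetical API): returns the stored answer whose
    prompt is most similar to the query, if that similarity >= threshold."""

    def __init__(self, threshold: float = 0.75) -> None:
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        query = embed(prompt)
        best_score, best_answer = 0.0, None
        # Linear scan over stored entries, keeping the closest match.
        for vector, answer in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, prompt: str, answer: str) -> None:
        self.entries.append((embed(prompt), answer))


cache = SemanticCache(threshold=0.75)
cache.put("What is the capital of France?", "Paris")
print(cache.get("What's the capital city of France?"))  # Paris (similarity ≈ 0.77)
print(cache.get("How do I bake bread?"))  # None (no shared words, so a miss)
```

The threshold trades hit rate against the risk of serving an answer to a question that only looks similar. A linear scan is fine for a sketch, but larger caches typically use a vector index for the nearest-neighbour lookup.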

What it does

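ThriftyAI sits between your app and AI providers and intercepts each request. It caches responses by meaning, so semantically similar requests are served from the cache instead of triggering another API call. It also masks personal data and automatically switches to another provider when one fails, which keeps your service available.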
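To illustrate the request path described above, here is a minimal Python sketch built on stated assumptions. It masks emails and phone numbers before a prompt leaves the app, then tries providers in order and falls back to the next one on failure. The regex patterns, `mask_pii`, `call_with_fallback`, `AllProvidersFailed`, and the provider callables are hypothetical names for illustration, not ThriftyAI's API. Providers are modelled as plain functions rather than real SDK clients.

```python
import re
from typing import Callable, Sequence

# Hypothetical, deliberately simple patterns; real PII detection also has to
# handle names, addresses, account numbers, and many more formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

Provider = Callable[[str], str]  # takes a prompt, returns a completion


class AllProvidersFailed(Exception):
    """Raised when every configured provider errors out."""


def mask_pii(text: str) -> str:
    """Replace emails and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def call_with_fallback(prompt: str, providers: Sequence[tuple[str, Provider]]) -> tuple[str, str]:
    """Mask PII, then try each provider in order until one succeeds.

    Returns (provider_name, reply). Any exception counts as a failure here;
    a production proxy would more likely fall back only on timeouts,
    rate limits, and server errors.
    """
    safe_prompt = mask_pii(prompt)
    errors = []
    for name, call in providers:
        try:
            return name, call(safe_prompt)
        except Exception as exc:
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def unavailable(prompt: str) -> str:
    raise TimeoutError("provider unavailable")


def echo(prompt: str) -> str:
    return f"echo: {prompt}"


name, reply = call_with_fallback(
    "Email jane@example.com about it",
    [("primary", unavailable), ("backup", echo)],
)
print(name, reply)  # backup echo: Email [EMAIL] about it
```

Masking before the provider call means the fallback provider also receives only the masked prompt.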

Who it's for

It is designed for teams deploying AI in production who want to reduce API costs and improve response speed.

Why it matters

It stops you from paying repeatedly for similar AI queries, reducing both cost and latency while maintaining data privacy and reliability.
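A rough back-of-the-envelope model shows how caching translates into savings. The model and its symbols are our own assumptions, not ThriftyAI's published method. Let $N$ be the number of requests, $c$ the average provider cost of one uncached call, $h$ the fraction of requests served from cache, and $e$ the per-request cost of the cache lookup itself:

$$
C_{\text{none}} = N c, \qquad C_{\text{cache}} = N\big[(1-h)\,c + e\big]
$$

$$
\text{savings} = 1 - \frac{C_{\text{cache}}}{C_{\text{none}}} = 1 - (1-h) - \frac{e}{c} = h - \frac{e}{c}
$$

When $e$ is small relative to $c$, the savings fraction approaches the hit rate $h$, so an 80% cost reduction implies that roughly 80% of requests are answered from cache. Average latency follows the same weighting, $\bar{t} = h\,t_{\text{hit}} + (1-h)\,t_{\text{miss}}$.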