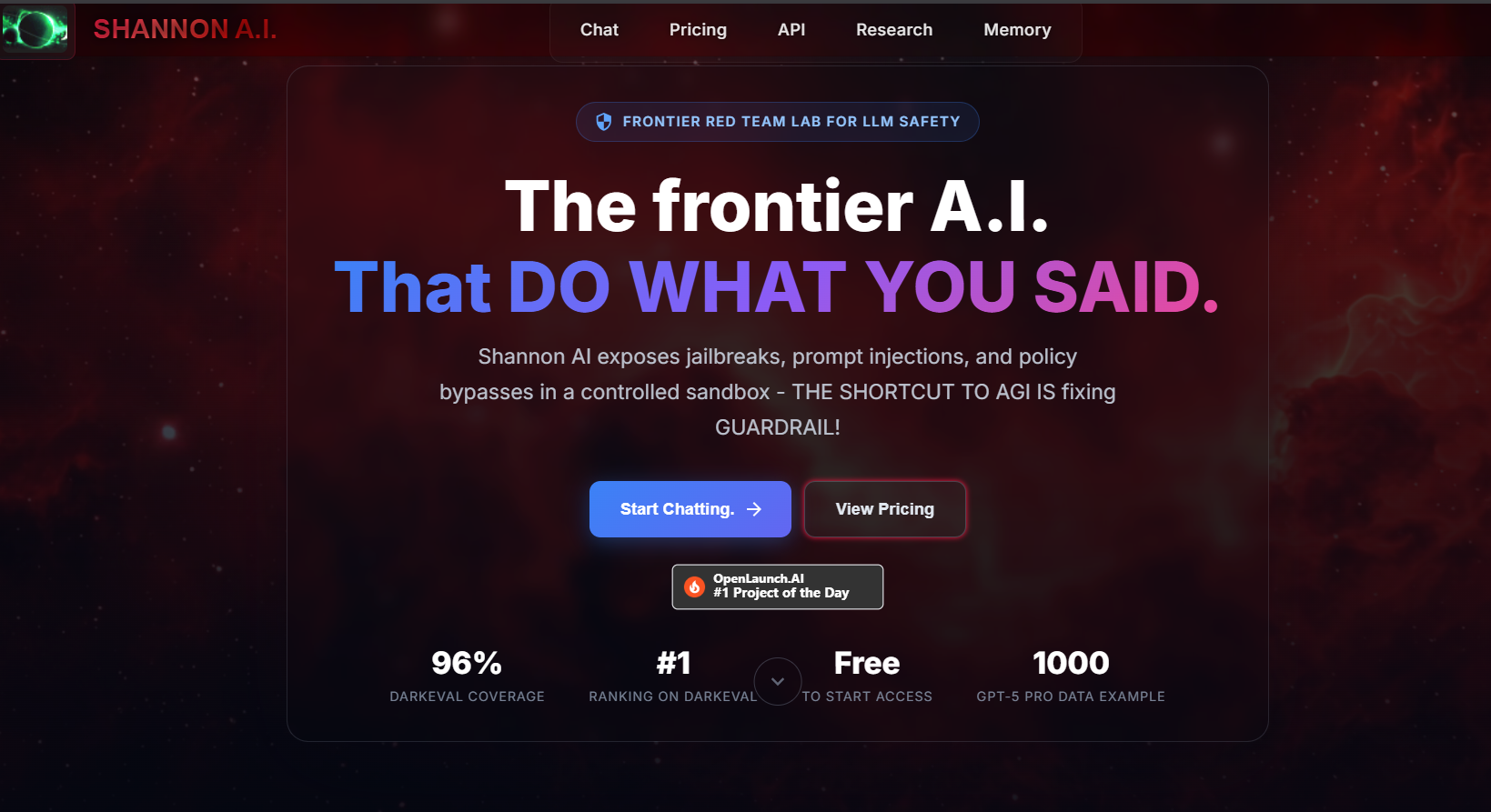

Shannon AI - Frontier Red Team Tool

Uncensored AI models for red team research.

Summary: Shannon AI provides uncensored AI models with safety guardrails removed, so that security researchers can surface failure modes and identify vulnerabilities before malicious actors exploit them. It advertises transparent reasoning and broad exploit coverage for red team testing.

What it does

Shannon AI offers foundation and advanced models with relaxed constraints. According to the project, the models are trained on GPT-5 Pro outputs and fine-tuned with supervised and reinforcement learning. The latest versions expose their chain-of-thought reasoning, so researchers can watch multi-step exploit planning as it unfolds.

Who it's for

Shannon AI is aimed at AI safety researchers, security teams conducting red team assessments, policy groups analyzing AI risks, and academics working on alignment and adversarial testing.

Why it matters

By exposing AI behaviors without safety guardrails, Shannon AI enables testing of vulnerabilities that closed or safety-trained models conceal, supporting the development of safer AI systems.