RunStack

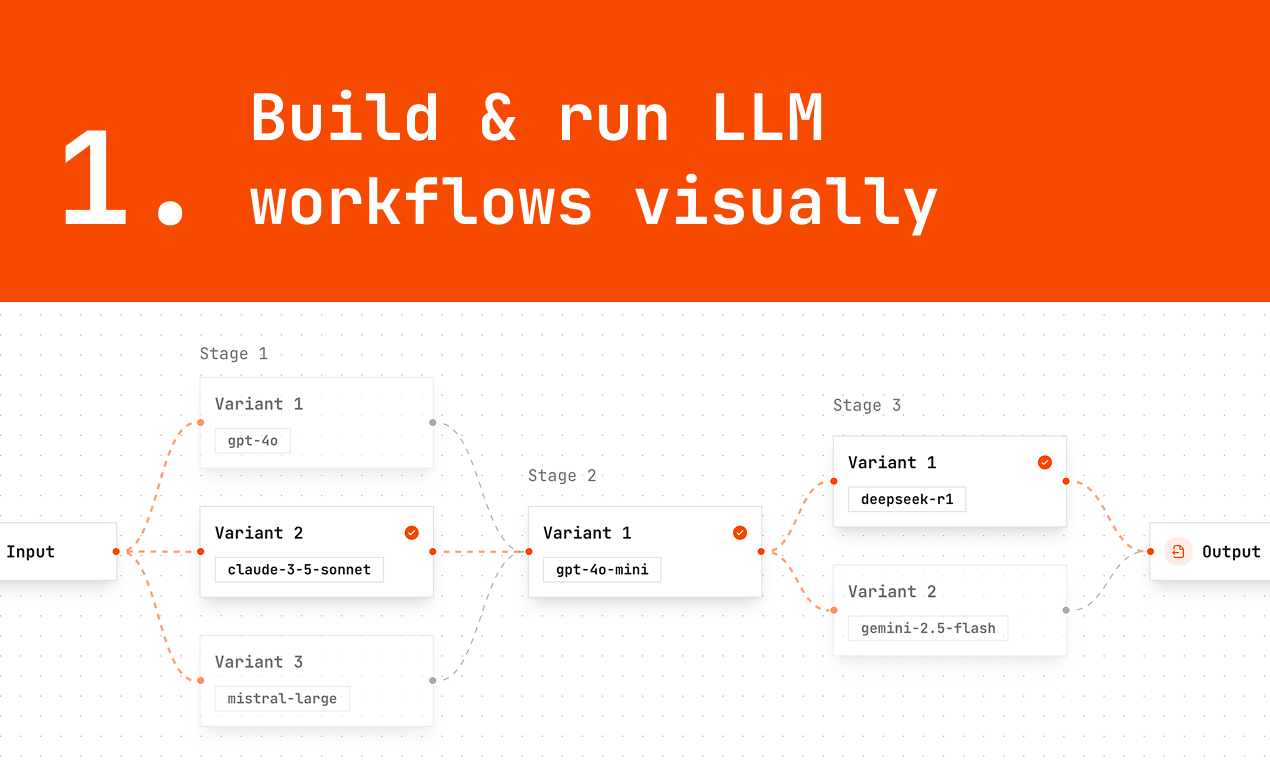

Build, test, and run LLM workflows — visually.

RunStack – Visual design and API deployment for LLM workflows

Summary: RunStack enables users to create, test, and run large language model (LLM) workflows using a visual editor, then deploy them as reusable API endpoints. It supports chaining prompts, running variants, comparing outputs and costs, and integrating workflows into applications.

What it does

RunStack provides a visual interface to design and test AI workflows, allowing users to compare prompt variants and select the best output. Workflows can be exposed as production-ready API endpoints callable from apps or backends.
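As a rough illustration of comparing prompt variants by output quality and cost, here is a minimal Python sketch. It is not RunStack's implementation or API: the `VariantResult` structure, the `pick_best` function, the score values, and the costs are all hypothetical, and the selection rule (highest score, then lowest cost) is only one plausible choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantResult:
    """One prompt variant's result (hypothetical structure, not RunStack's schema)."""
    name: str
    output: str
    score: float     # quality score from a reviewer or evaluator; higher is better
    cost_usd: float  # total cost of the run in US dollars


def pick_best(results: list[VariantResult]) -> VariantResult:
    """Return the highest-scoring variant, breaking ties by lower cost."""
    if not results:
        raise ValueError("no variant results to compare")
    # Negate the score so that min() prefers high scores, then low cost.
    return min(results, key=lambda r: (-r.score, r.cost_usd))


# Made-up example data for three variants of the same prompt.
results = [
    VariantResult("concise", "Short summary.", score=0.82, cost_usd=0.004),
    VariantResult("detailed", "Longer summary with context.", score=0.90, cost_usd=0.011),
    VariantResult("detailed-cheap", "Longer summary, smaller model.", score=0.90, cost_usd=0.006),
]

best = pick_best(results)
print(best.name)  # prints "detailed-cheap"
```

Here the two "detailed" variants tie on score, so the cheaper one wins. A different rule, such as a cost ceiling or a weighted trade-off, could be swapped in by changing the sort key.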

Who it's for

RunStack is designed for developers building AI agents, internal AI tools, or production LLM pipelines who need to move from prompt experimentation to deployment.

Why it matters

Initial prompt testing is only the first step. Managing, reusing, and integrating LLM workflows afterward adds complexity, and RunStack reduces it by keeping design, variant comparison, and API deployment in one tool.