ReliAPI

Stop losing money on failed OpenAI and Anthropic API calls.

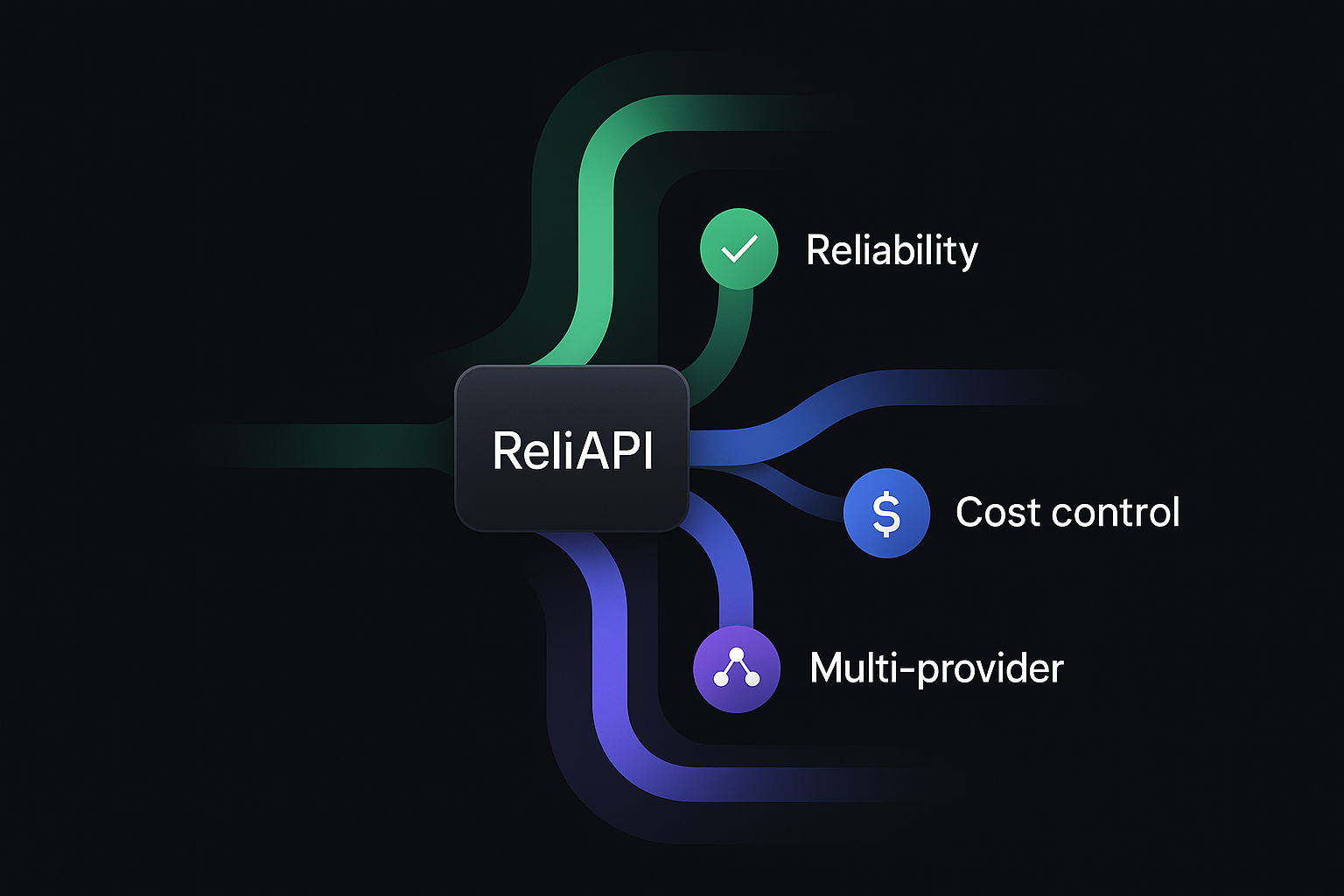

ReliAPI – Reliability and cost control for LLM and HTTP API calls

Summary: ReliAPI is middleware for LLM APIs such as OpenAI, Anthropic, and Mistral, and for generic HTTP APIs. It reduces costs and prevents duplicate charges through smart caching, idempotency, budget caps, automatic retries, and real-time cost tracking, which together address common problems in LLM API usage.

What it does

ReliAPI acts as a reliability layer between applications and LLM or HTTP APIs. It retries failed calls with exponential backoff, caches repeated requests to cut costs by 50-80%, enforces idempotency so the same request is not charged twice, and applies budget limits that automatically reject requests that would cost too much.
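To make these four behaviors concrete, here is a minimal Python sketch of how such a layer could be structured. It is an illustration only, not ReliAPI's actual API or implementation: `ReliabilityLayer`, `BudgetExceeded`, the `send` callable, and every parameter name are hypothetical, and the sketch assumes that the caller supplies a cost estimate and that transient failures surface as `ConnectionError`.

```python
import hashlib
import json
import time


class BudgetExceeded(Exception):
    """Raised when a request's estimated cost is above the per-request cap."""


class ReliabilityLayer:
    """Hypothetical wrapper around a `send(payload)` callable (illustration only)."""

    def __init__(self, send, max_retries=3, base_delay=0.5,
                 budget_cap_usd=1.0, sleep=time.sleep):
        self.send = send                    # function that performs the real API call
        self.max_retries = max_retries      # retries after the first attempt
        self.base_delay = base_delay        # first backoff delay, in seconds
        self.budget_cap_usd = budget_cap_usd
        self.sleep = sleep                  # injectable so tests need not wait
        self.cache = {}                     # payload hash -> response
        self.idempotent_results = {}        # idempotency key -> response
        self.spent_usd = 0.0                # running total of estimated spend

    def call(self, payload, estimated_cost_usd, idempotency_key=None):
        # Budget cap: reject costly requests before anything is sent.
        if estimated_cost_usd > self.budget_cap_usd:
            raise BudgetExceeded(
                f"estimated ${estimated_cost_usd:.2f} exceeds "
                f"cap ${self.budget_cap_usd:.2f}"
            )

        # Idempotency: a key seen before returns the stored result, no new charge.
        if idempotency_key is not None and idempotency_key in self.idempotent_results:
            return self.idempotent_results[idempotency_key]

        # Caching: identical payloads map to the same key and reuse the response.
        cache_key = hashlib.sha256(
            json.dumps(payload, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if cache_key in self.cache:
            return self.cache[cache_key]

        # Retries with exponential backoff: base_delay, 2x, 4x, ...
        for attempt in range(self.max_retries + 1):
            try:
                result = self.send(payload)
                break
            except ConnectionError:
                if attempt == self.max_retries:
                    raise  # out of retries, surface the error to the caller
                self.sleep(self.base_delay * 2 ** attempt)

        # Record spend and remember the result for later duplicates.
        self.spent_usd += estimated_cost_usd
        self.cache[cache_key] = result
        if idempotency_key is not None:
            self.idempotent_results[idempotency_key] = result
        return result
```

In this sketch, wrapping an existing client function is a single line, for example `layer = ReliabilityLayer(send=my_client_call)`, where `my_client_call` stands in for whatever function already performs the request. A production layer would also need cache expiry, shared storage across processes, and real cost accounting, all of which are omitted here.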

Who it's for

It is intended for developers and teams using LLM APIs who need to control expenses, handle rate limits, and improve the reliability of API calls without modifying existing code.

Why it matters

ReliAPI prevents the financial losses caused by failed or repeated API calls. By automating error handling and usage monitoring, it keeps interactions with LLM providers reliable and cost-effective.