Qwen3.5

The 397B-parameter native multimodal agent with 17B active parameters

Qwen3.5 – Native multimodal agent combining large-scale reasoning with efficient inference

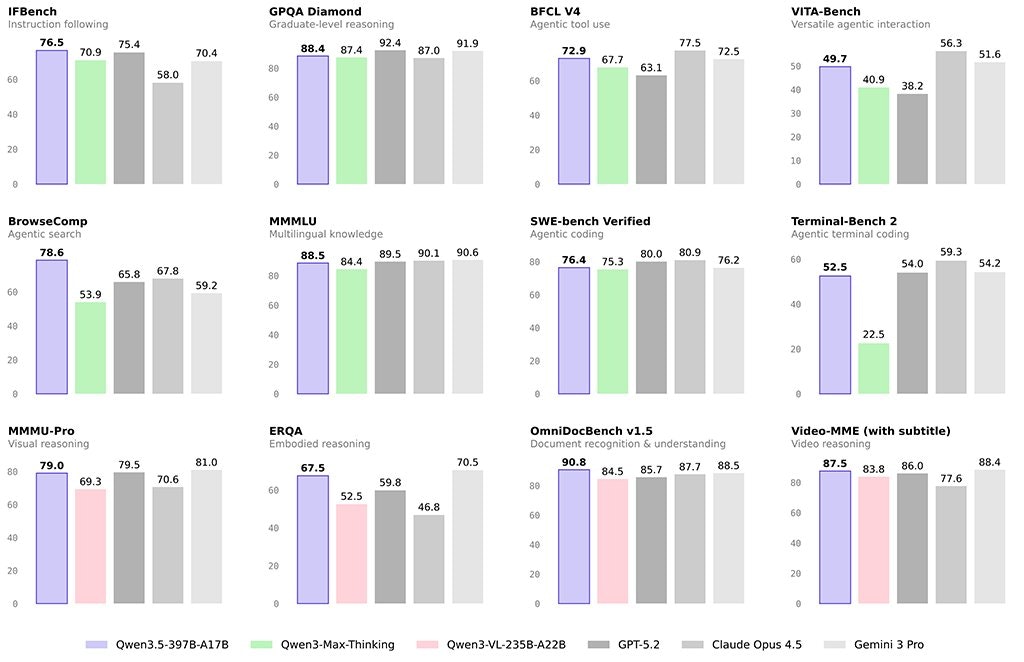

Summary: Qwen3.5 is an open-weight vision-language model with 397 billion parameters. Its hybrid architecture activates only 17 billion of them per token. It targets long-horizon agentic tasks, pairing deep reasoning with fast inference, and handles visual input natively rather than through external vision adapters.
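As a rough back-of-the-envelope illustration of what sparse activation implies, the sketch computes the share of weights used for each token and the memory needed just to hold all of the weights. It assumes 16-bit (bf16/fp16) weights at 2 bytes per parameter, which the summary does not state, and the helper names are hypothetical.

```python
# Back-of-the-envelope numbers for a sparse (MoE-style) model, using the figures above.
TOTAL_PARAMS_B = 397   # total parameters, in billions
ACTIVE_PARAMS_B = 17   # parameters activated per token, in billions

def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the weights that participate in computing each token."""
    return active_b / total_b

def weight_memory_gb(total_b: float, bytes_per_param: float) -> float:
    """Memory (decimal GB) to store *all* weights; every expert must stay loaded.

    Assumption: bytes_per_param=2 models bf16/fp16 weights; quantized formats differ.
    """
    # (total_b * 1e9 params) * bytes_per_param / (1e9 bytes per GB)
    return total_b * bytes_per_param

print(f"active fraction: {active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B):.1%}")  # active fraction: 4.3%
print(f"bf16 weight memory: {weight_memory_gb(TOTAL_PARAMS_B, 2):.0f} GB")        # bf16 weight memory: 794 GB
```

The takeaway is that per-token compute scales roughly with the 17B active parameters, while memory still scales with all 397B, so serving the model requires room for the full set of weights.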

What it does

It processes text and visual inputs natively, and its hybrid architecture combines linear attention with a Mixture-of-Experts (MoE) design. This keeps complex workflows efficient and reduces token usage.
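The Mixture-of-Experts idea can be illustrated with a generic top-k router in pure Python. This is a textbook sketch, not Qwen3.5's actual routing: the number of experts, `top_k=2`, the dot-product gate, and the toy scaling experts are all hypothetical. It shows the mechanism behind an "active parameters" figure: a gate scores every expert, but only the few highest-scoring experts actually run for a given token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    x: Vector,
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token vector x to its top_k experts and mix their outputs."""
    # One gate logit per expert: dot product of x with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalise the gate weights over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the selected experts do any work
        out = [o + w * yj for o, yj in zip(out, y)]
    return out, chosen

# Hypothetical toy experts: expert i scales its input by (i + 1).
experts = [lambda v, s=float(i + 1): [s * vi for vi in v] for i in range(4)]
gate_weights = [[0.1, 0.0], [0.0, 0.2], [1.0, 1.0], [0.5, 0.5]]

out, chosen = moe_forward([1.0, 0.5], gate_weights, experts, top_k=2)
print("experts run:", chosen)  # experts run: [2, 3]
print("output:", out)
```

In a real MoE layer the experts are feed-forward sub-networks and routing happens per token inside every MoE layer, but the principle is the same: compute per token tracks the experts that run, not the total parameter count.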

Who it's for

Designed for developers and researchers building agentic applications that require scalable vision-language reasoning at low latency.

Why it matters

It balances the reasoning depth of a large model against inference speed, which is what long-horizon tasks in multimodal agents demand.