OpenMark

Benchmark AI models for YOUR use case

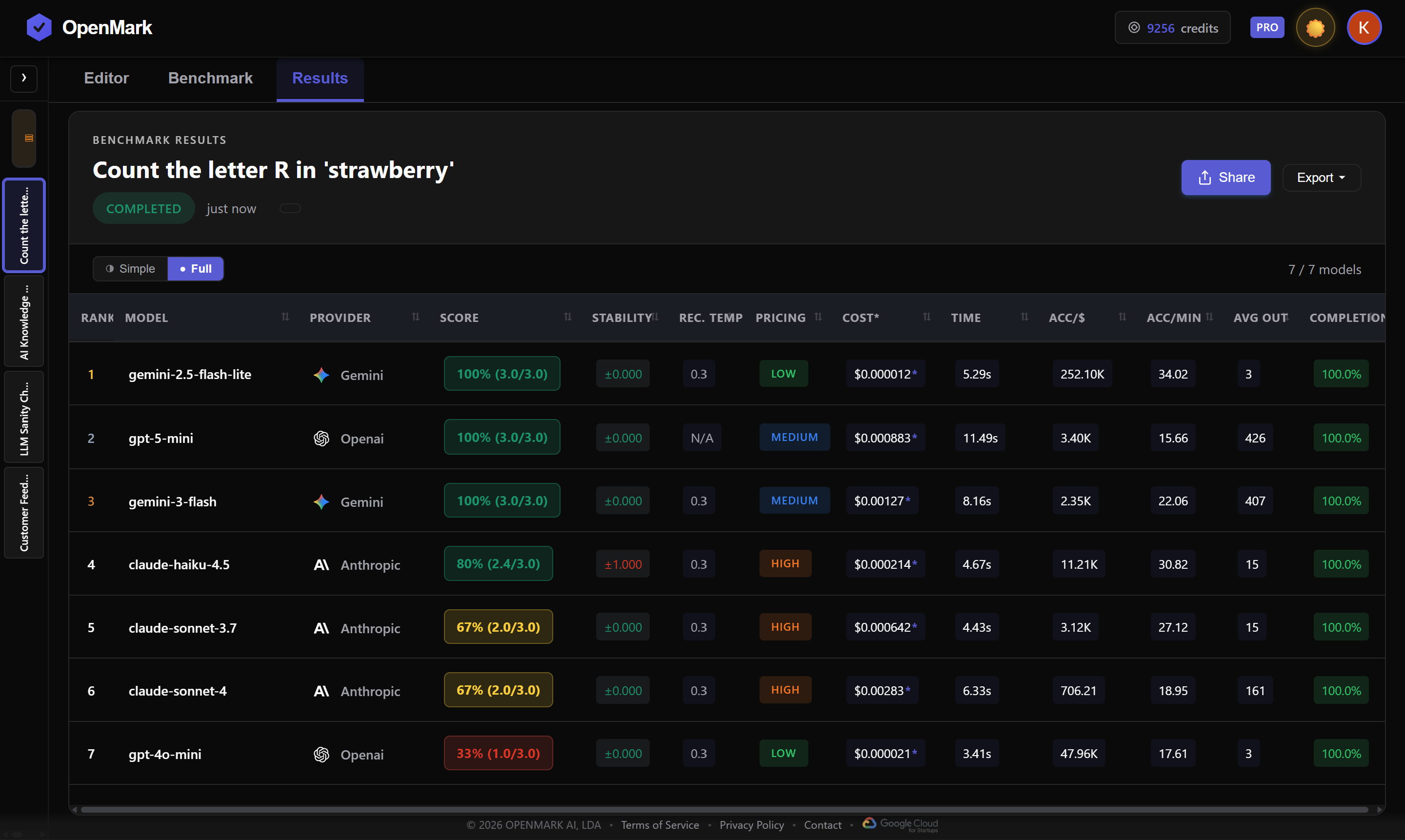

OpenMark – Benchmark AI models with deterministic, cost-aware metrics

Summary: OpenMark tests roughly 100 AI models against your own prompts and reports deterministic scores, costs calculated from real API pricing, and stability metrics. It does not rely on LLM-based judging or voting, so results are reproducible and specific to your use case.

What it does

OpenMark benchmarks AI models on your exact tasks using 18 scoring modes. It factors real API pricing into its cost and efficiency analysis, and it supports vision and document inputs.
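To make the idea of deterministic, cost-aware scoring concrete, here is a minimal Python sketch. It is not OpenMark's API or implementation: the names `Pricing`, `exact_match_score`, `call_cost`, and `score_per_dollar` are hypothetical, exact match stands in for just one possible scoring mode, and the prices are illustrative placeholders rather than real provider rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-model pricing in USD per one million tokens."""
    input_per_million: float
    output_per_million: float


def _normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences
    # do not change the score.
    return " ".join(text.lower().split())


def exact_match_score(expected: str, actual: str) -> float:
    """Deterministic score: 1.0 on a normalized exact match, else 0.0.

    No LLM judge is involved, so the same inputs always give the same score.
    """
    return 1.0 if _normalize(expected) == _normalize(actual) else 0.0


def call_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of one API call in USD, derived from token counts and pricing."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


def score_per_dollar(score: float, cost: float) -> float:
    """Simple efficiency figure: score obtained per USD spent."""
    return score / cost if cost > 0 else 0.0


if __name__ == "__main__":
    pricing = Pricing(input_per_million=2.0, output_per_million=8.0)  # illustrative
    score = exact_match_score("Paris", "  paris ")
    cost = call_cost(pricing, input_tokens=1000, output_tokens=500)
    print(score, cost, score_per_dollar(score, cost))
```

Because both the score and the cost are pure functions of the model output, token counts, and a price table, rerunning the comparison on the same data gives the same numbers.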

Who it's for

It is designed for developers and teams that need precise, use-case-specific comparisons of AI models, especially when selecting models for pipelines such as retrieval-augmented generation (RAG).

Why it matters

Generic benchmarks are an unreliable guide to how a model will perform on a specific task. OpenMark addresses this with reproducible, task-specific performance and cost metrics that inform model selection.