Object store built for AI workloads

High-performance, AI-native object store with S3 API included


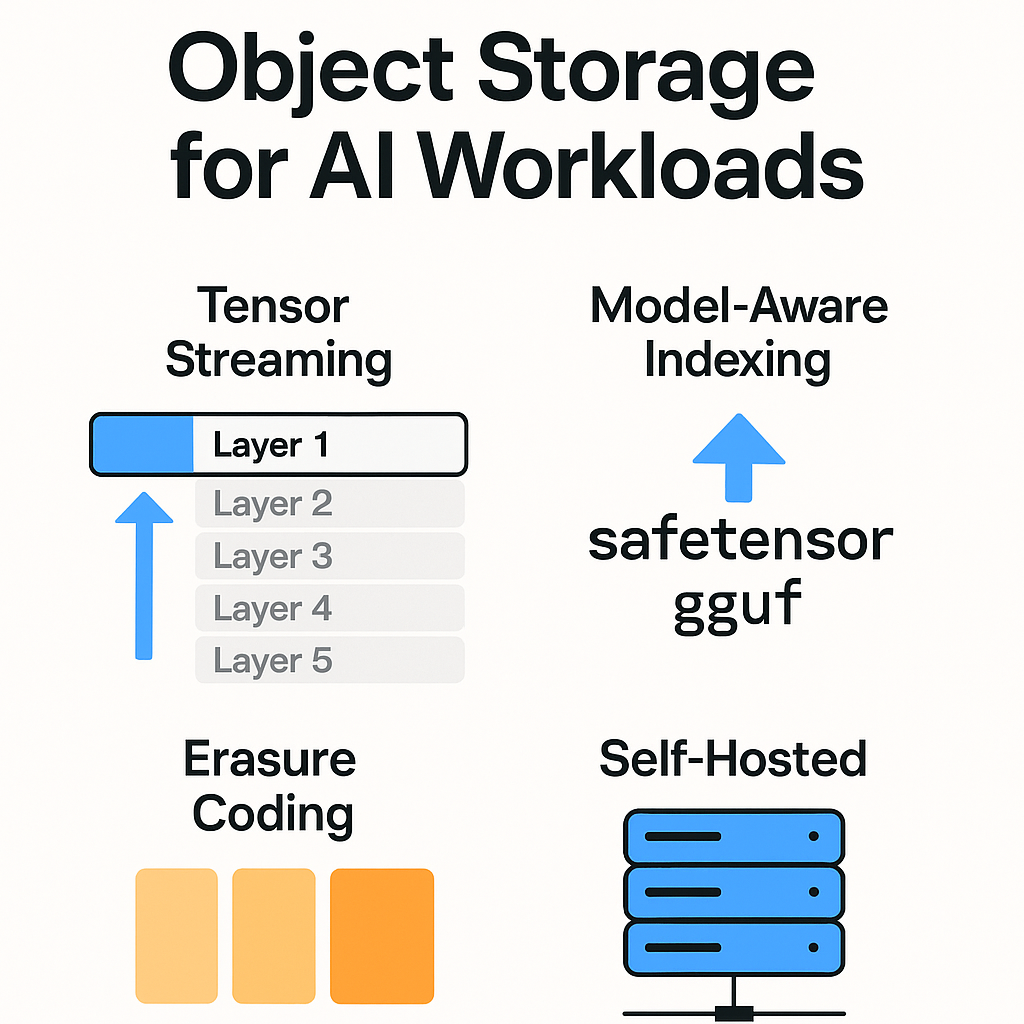

Summary: Anvil is an open-source, AI-native object store designed for large AI model files. It combines S3 compatibility with native gRPC support, and it features model-aware indexing for formats such as safetensors, GGUF, and ONNX, tensor-level streaming, and erasure-coded storage to optimize the handling of multi-GB models.
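Model-aware indexing and tensor-level streaming both depend on knowing where each tensor lives inside a file. For safetensors, that information is in the file's own header: an 8-byte little-endian length, then a JSON table mapping each tensor name to its `dtype`, `shape`, and `data_offsets`, then the raw tensor data. The Python sketch below builds such an index and reads a single tensor's bytes without reading the rest of the file. It assumes Anvil indexes safetensors in roughly this way; the helper names (`read_safetensors_index`, `tensor_byte_range`, `read_tensor_bytes`, `http_range_header`, `build_demo_file`) are hypothetical and not Anvil's API.

```python
import io
import json
import struct
from typing import BinaryIO, Dict, Tuple

# Hypothetical helpers illustrating a tensor index; not Anvil's actual API.


def read_safetensors_index(f: BinaryIO) -> Tuple[int, Dict[str, dict]]:
    """Return (data_start, index) where index maps tensor name -> header entry."""
    f.seek(0)
    (header_len,) = struct.unpack("<Q", f.read(8))  # u64 little-endian header size
    header = json.loads(f.read(header_len))
    header.pop("__metadata__", None)  # optional free-form metadata, not a tensor
    return 8 + header_len, header


def tensor_byte_range(data_start: int, entry: dict) -> Tuple[int, int]:
    """Absolute [start, end) byte range of one tensor within the file."""
    begin, end = entry["data_offsets"]  # relative to the start of the data buffer
    return data_start + begin, data_start + end


def read_tensor_bytes(f: BinaryIO, name: str) -> bytes:
    """Seek straight to one tensor and read only its bytes."""
    data_start, index = read_safetensors_index(f)
    start, end = tensor_byte_range(data_start, index[name])
    f.seek(start)
    return f.read(end - start)


def http_range_header(start: int, end: int) -> str:
    """Convert a half-open [start, end) range to an HTTP Range value (inclusive end)."""
    return f"bytes={start}-{end - 1}"


def build_demo_file() -> io.BytesIO:
    """A tiny in-memory safetensors file with two F32 tensors, 'a' and 'b'."""
    data = struct.pack("<4f", 1.0, 2.0, 3.0, 4.0)
    header = {
        "__metadata__": {"format": "pt"},
        "a": {"dtype": "F32", "shape": [2], "data_offsets": [0, 8]},
        "b": {"dtype": "F32", "shape": [2], "data_offsets": [8, 16]},
    }
    header_bytes = json.dumps(header).encode()
    return io.BytesIO(struct.pack("<Q", len(header_bytes)) + header_bytes + data)


if __name__ == "__main__":
    f = build_demo_file()
    print(struct.unpack("<2f", read_tensor_bytes(f, "b")))
```

Against an S3-compatible endpoint, the same byte range could be requested with a standard ranged `GetObject` (the HTTP `Range` header), which is one plausible way tensor-level streaming avoids downloading a whole multi-GB file. The source does not say whether Anvil's S3 or gRPC interface works exactly this way.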

What it does

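Anvil stores and serves large AI models efficiently by understanding model formats natively and streaming data at the tensor level. It exposes both an S3-compatible API and gRPC for integration, and it uses erasure coding for reliable storage: objects are split into data shards plus parity shards, so lost shards can be rebuilt from the survivors.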
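The source does not say which erasure code Anvil uses. Production systems commonly use Reed-Solomon codes with several parity shards; the Python sketch below swaps in the simplest case, a single XOR parity shard (as in RAID 5), to show the core idea: split an object into `k` data shards, store one extra parity shard, and rebuild any one lost shard from the others. The function names `encode` and `decode` are hypothetical.

```python
from functools import reduce
from typing import List, Optional

# Single-parity XOR erasure code: an illustrative stand-in, not Anvil's scheme.


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal data shards (zero-padded) plus one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)  # XOR of all data shards
    return shards + [parity]


def decode(shards: List[Optional[bytes]], k: int, original_len: int) -> bytes:
    """Rebuild the original data when at most one of the k+1 shards is None."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single-parity code can recover only one lost shard")
    if missing:
        # XOR of every surviving shard equals the missing one,
        # because all k+1 shards XOR together to zero.
        present = [s for s in shards if s is not None]
        shards = list(shards)
        shards[missing[0]] = reduce(xor_bytes, present)
    return b"".join(shards[:k])[:original_len]  # drop parity and padding


if __name__ == "__main__":
    blob = b"safetensors-shard-demo"
    stored = encode(blob, k=4)
    stored[1] = None  # simulate losing one data shard
    print(decode(stored, 4, len(blob)))
```

A Reed-Solomon code generalizes this idea to `m` parity shards and can rebuild any `m` lost shards, at the cost of arithmetic over a finite field instead of plain XOR.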

Who it's for

It targets users who manage large AI models: storing and versioning multi-GB model files and running local inference on them.

Why it matters

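Anvil addresses limitations of existing tools in three ways: erasure coding reduces storage overhead, tensor-level streaming can speed up inference by letting a client load tensors without first downloading an entire multi-GB file, and model-aware indexing gives native support for AI model formats instead of treating them as opaque blobs.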
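The storage-overhead point can be made concrete with a standard comparison. Assume, for illustration only, that erasure coding uses an MDS code such as Reed-Solomon with $k$ data shards and $m$ parity shards, compared against keeping $r$ full replicas; the source does not state Anvil's actual parameters. Replication tolerates $r-1$ lost copies, while a $(k, m)$ MDS code tolerates any $m$ lost shards:

$$
\text{overhead}_{\text{replication}} = r, \qquad \text{overhead}_{\text{EC}(k,m)} = \frac{k+m}{k}
$$

For a 10 GB model, with $r = 3$ versus $k = 4$, $m = 2$ (both survive any two failures):

$$
3 \times 10\,\text{GB} = 30\,\text{GB} \qquad \text{vs.} \qquad \frac{4+2}{4} \times 10\,\text{GB} = 15\,\text{GB}
$$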