ModelRiver

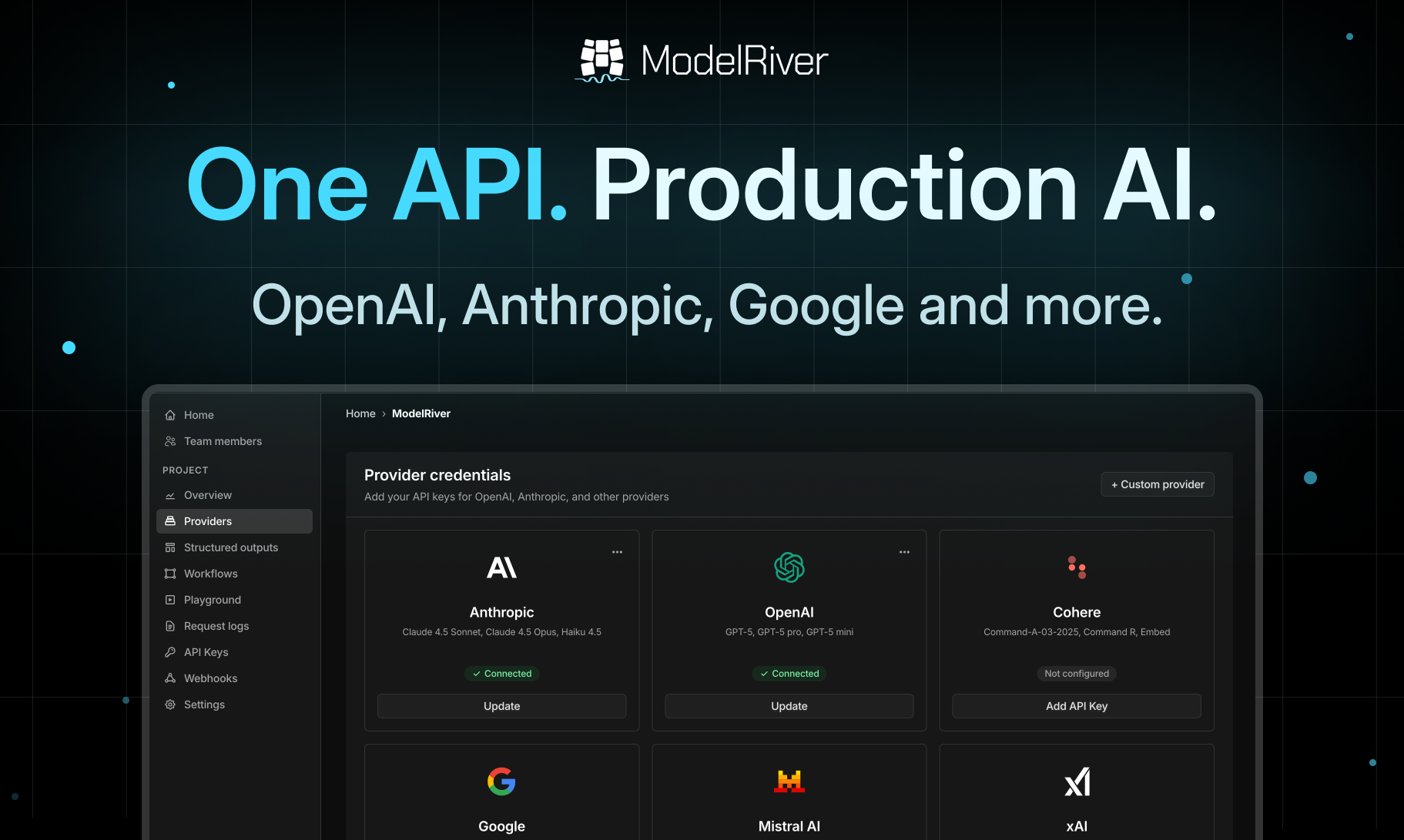

One AI API for production - streaming, failover, logs

ModelRiver – Unified AI API gateway with streaming, failover, and analytics

Summary: ModelRiver provides a single OpenAI-compatible API that integrates multiple LLM providers, offering consistent streaming, automatic failover, unified logging, and rate limiting. It simplifies AI infrastructure by standardizing interactions across providers and improving reliability and observability.

What it does

ModelRiver routes requests to multiple AI providers through one API, with support for custom configurations, streaming responses, failover, retries, and consolidated analytics. You can bring your own provider API keys and still get consistent behavior across models.
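To make the failover and retry behavior concrete, here is a minimal sketch of the general technique in Python. It is not ModelRiver's implementation or API: `complete_with_failover`, `ProviderError`, and the two stub providers are hypothetical names used only for illustration, and a real gateway would add streaming, timeouts, and logging on top.

```python
import time
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider callable when a request fails (hypothetical)."""


# A provider is modeled as a plain function: prompt in, completion text out.
Provider = Callable[[str], str]


def complete_with_failover(
    prompt: str,
    providers: Sequence[Tuple[str, Provider]],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try each provider in order, retrying before failing over to the next.

    Returns (provider_name, completion) from the first provider that succeeds.
    """
    errors: List[str] = []
    for name, call in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt}: {exc}")
                # Linear backoff between retries on the same provider.
                if attempt < retries_per_provider:
                    time.sleep(backoff_seconds * attempt)
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stub providers standing in for real LLM backends (assumptions, not real APIs).
def flaky_primary(prompt: str) -> str:
    raise ProviderError("503 from primary")


def healthy_backup(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    used, text = complete_with_failover(
        "hi", [("primary", flaky_primary), ("backup", healthy_backup)]
    )
    print(used, text)
```

In this sketch the caller sees one function and one error type no matter which backend answered, which is the same idea as putting a single API in front of several providers.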

Who it's for

ModelRiver is designed for developers and teams building AI-powered products who need a reliable, vendor-agnostic API for managing multiple LLM providers.

Why it matters

Working with several providers directly means dealing with inconsistent SDKs, differing streaming behavior, provider outages, and fragmented logs. ModelRiver unifies this infrastructure behind one API, which reduces maintenance overhead and improves system stability.