ModelRed

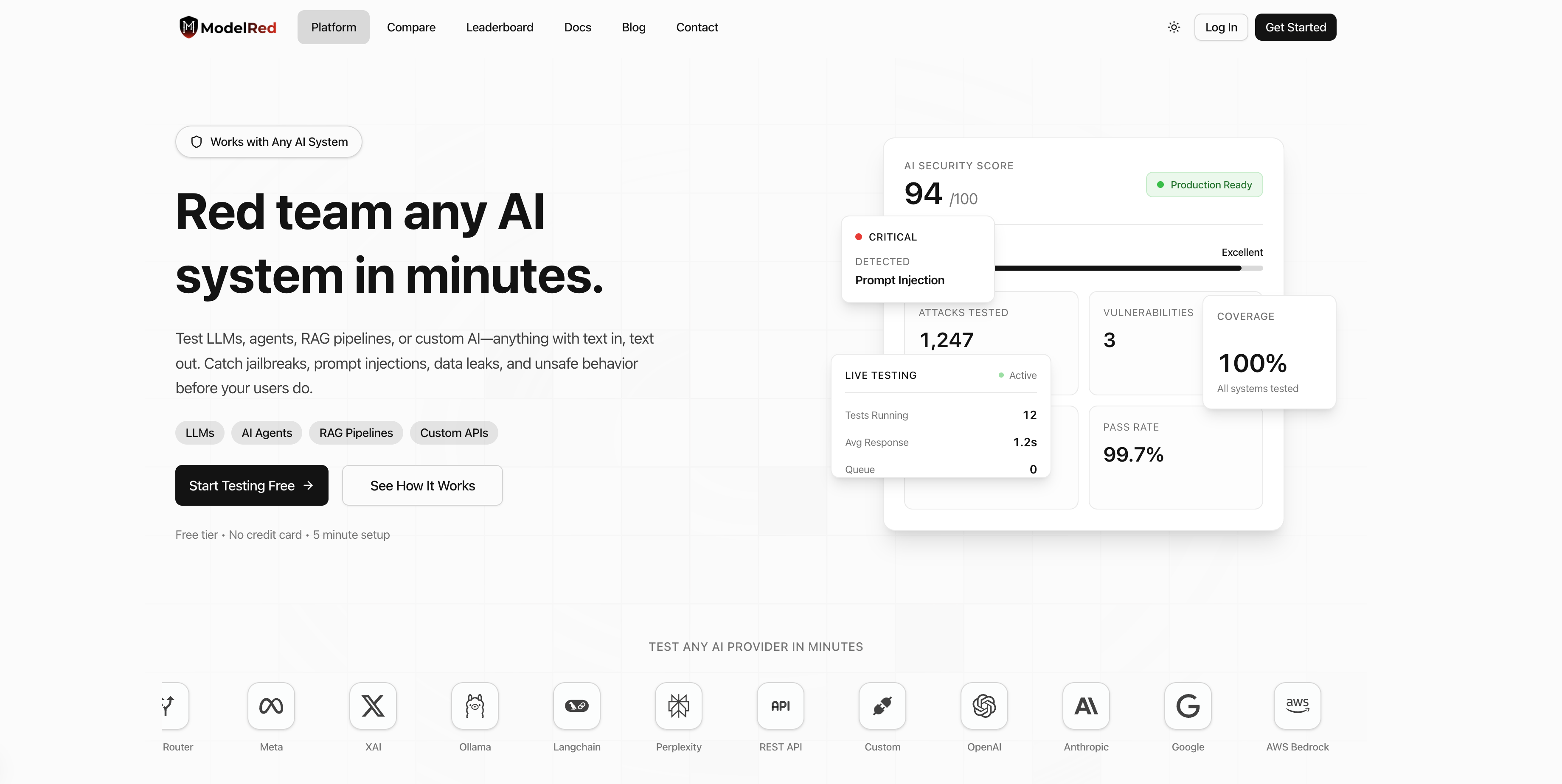

Red-team any AI system in minutes

#Developer Tools

#Artificial Intelligence

#Security

ModelRed tests AI applications for security vulnerabilities by running thousands of attack probes that look for prompt injections, data leaks, and jailbreaks. Each run produces a 0-10 security score, and CI/CD deployments that score below a set threshold are blocked. It works with OpenAI, Anthropic, AWS, Azure, Google, and custom endpoints, and includes a Python SDK for CI/CD automation. Attack vectors come from an open marketplace supplied by security researchers, which started with 200+ probes and is growing. A public leaderboard shows security scores for models such as Claude (9.5/10) and Mistral (3.3/10).
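To illustrate the score-threshold gate described above, here is a minimal sketch of how a CI step might turn a 0-10 security score into a pass/fail exit code. It does not use the ModelRed SDK, whose API is not shown here. The names `passes_gate` and `exit_code_for` and the 7.0 threshold are hypothetical, and the score is assumed to have been obtained from an assessment run beforehand.

```python
import sys


def passes_gate(score: float, threshold: float) -> bool:
    """Return True when a 0-10 security score meets the threshold.

    Hypothetical helper: the score is assumed to come from a prior
    assessment run; this function only applies the comparison.
    """
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score must be between 0 and 10, got {score}")
    return score >= threshold


def exit_code_for(score: float, threshold: float = 7.0) -> int:
    """Map a score to a process exit code (0 = deploy, 1 = block).

    The default threshold of 7.0 is an assumption for illustration.
    """
    if passes_gate(score, threshold):
        print(f"Security score {score}/10 meets threshold {threshold}: deploy allowed")
        return 0
    print(
        f"Security score {score}/10 is below threshold {threshold}: deploy blocked",
        file=sys.stderr,
    )
    return 1
```

A pipeline step could end with `sys.exit(exit_code_for(score))`, so that a nonzero exit code fails the job. Most CI systems treat a nonzero exit as a failed step, which keeps the gate independent of any particular CI vendor.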