LocalCoder

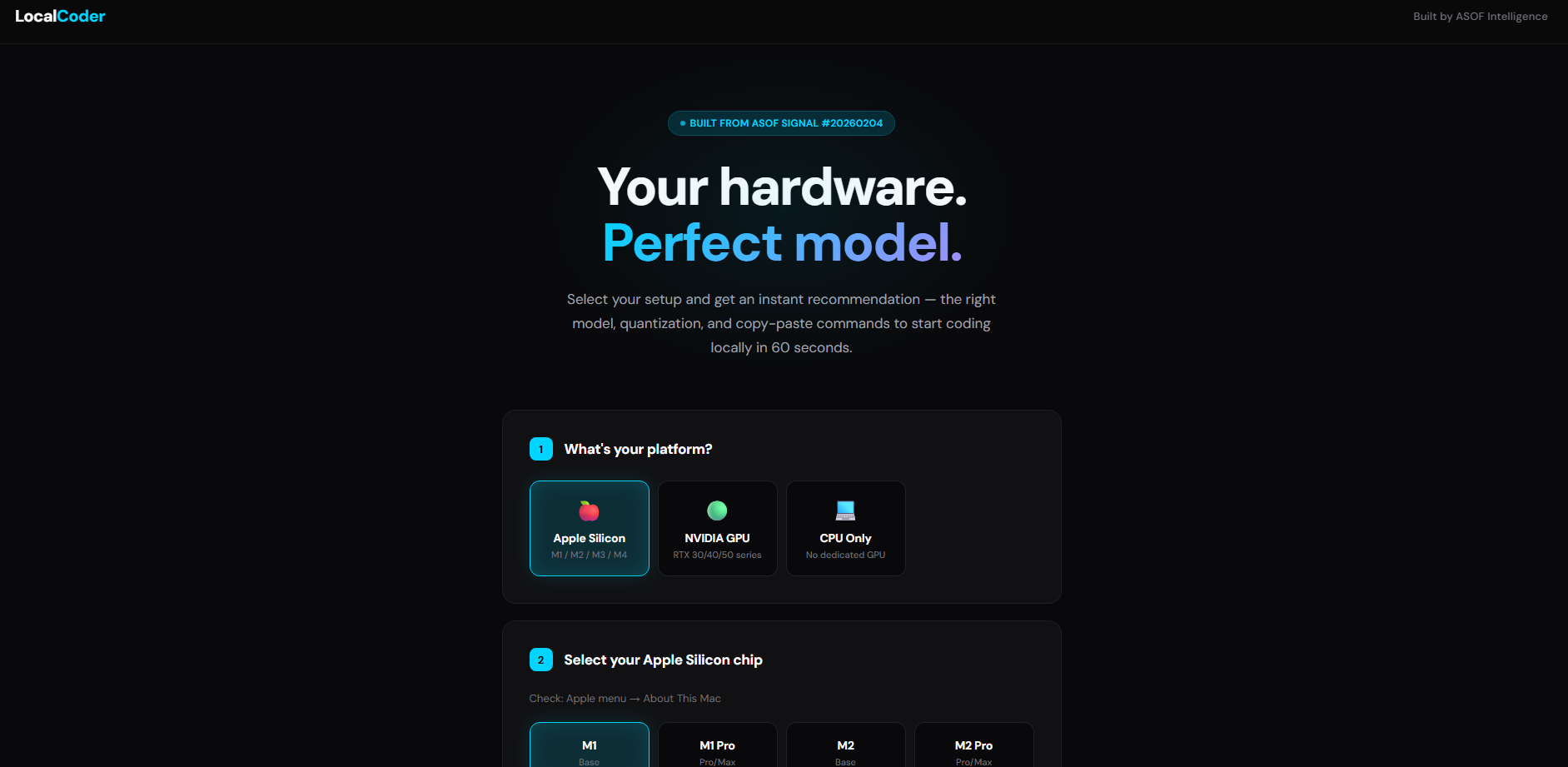

Your hardware → the perfect local AI model in 60 seconds

LocalCoder – Matches your hardware to the optimal local AI coding model

Summary: LocalCoder identifies the best local AI coding model based on your hardware, including platform, chip, and memory. It provides model recommendations, quantization details, speed estimates, and commands to start coding locally without manual research.

What it does

Users select their platform (Apple Silicon, NVIDIA, or CPU) and enter their hardware specs to receive tailored AI model options and setup commands. The recommendations are derived from real-world performance data from Hacker News (HN), Unsloth, and llama.cpp.
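The matching step described here can be pictured as a lookup over a ranked table of candidates. The sketch below is only an illustration of that idea, not LocalCoder's actual implementation: the `Hardware` and `Candidate` types, the `recommend` function, the model names, and the memory thresholds are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Hardware:
    platform: str     # "apple_silicon", "nvidia", or "cpu"
    memory_gb: float  # unified memory, VRAM, or system RAM


@dataclass(frozen=True)
class Candidate:
    name: str                  # hypothetical placeholder, not a real model
    quantization: str          # label only, for display
    min_memory_gb: float       # made-up threshold for illustration
    platforms: Tuple[str, ...]


# Hypothetical table, ordered from most to least capable.
CANDIDATES = [
    Candidate("example-coder-large", "Q4", 24.0, ("apple_silicon", "nvidia")),
    Candidate("example-coder-medium", "Q4", 12.0, ("apple_silicon", "nvidia", "cpu")),
    Candidate("example-coder-small", "Q4", 6.0, ("apple_silicon", "nvidia", "cpu")),
]


def recommend(hw: Hardware) -> Optional[Candidate]:
    """Return the first (most capable) candidate that runs on this hardware."""
    for candidate in CANDIDATES:
        if hw.platform in candidate.platforms and hw.memory_gb >= candidate.min_memory_gb:
            return candidate
    return None  # nothing in the table fits


if __name__ == "__main__":
    print(recommend(Hardware(platform="nvidia", memory_gb=16.0)))
```

Ordering the table from most to least capable keeps the selection logic to a single pass; a real tool would presumably also weigh speed estimates, which this sketch ignores.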

Who it's for

Developers seeking accurate local AI coding model configurations matched to their specific hardware.

Why it matters

It eliminates guesswork about VRAM, quantization, and model choice by providing data-driven recommendations for local AI coding setups.
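To give a sense of the guesswork involved, the rough arithmetic behind a "will it fit?" check is sketched below. It assumes the weights take about parameters × bits per weight ÷ 8 bytes, plus a flat overhead fraction for the KV cache and runtime. The function names and the 20% overhead figure are assumptions for illustration, not values taken from LocalCoder.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(
    params_billion: float,
    bits_per_weight: float,
    memory_gb: float,
    overhead_fraction: float = 0.2,  # assumed headroom for KV cache and runtime
) -> bool:
    """Rough check: weights plus assumed overhead must fit in available memory."""
    needed_gb = estimate_weight_gb(params_billion, bits_per_weight) * (1 + overhead_fraction)
    return needed_gb <= memory_gb


if __name__ == "__main__":
    # A 7B-parameter model at 4 bits per weight on an 8 GB device.
    print(estimate_weight_gb(7, 4))
    print(fits_in_memory(7, 4, memory_gb=8))
```

Real quantization formats store extra scaling data and context length changes the cache size, so actual usage will differ from this estimate.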