Local LLM-Vision — Fully Offline iOS AI

Run LLM & Vision AI fully offline on your iPhone

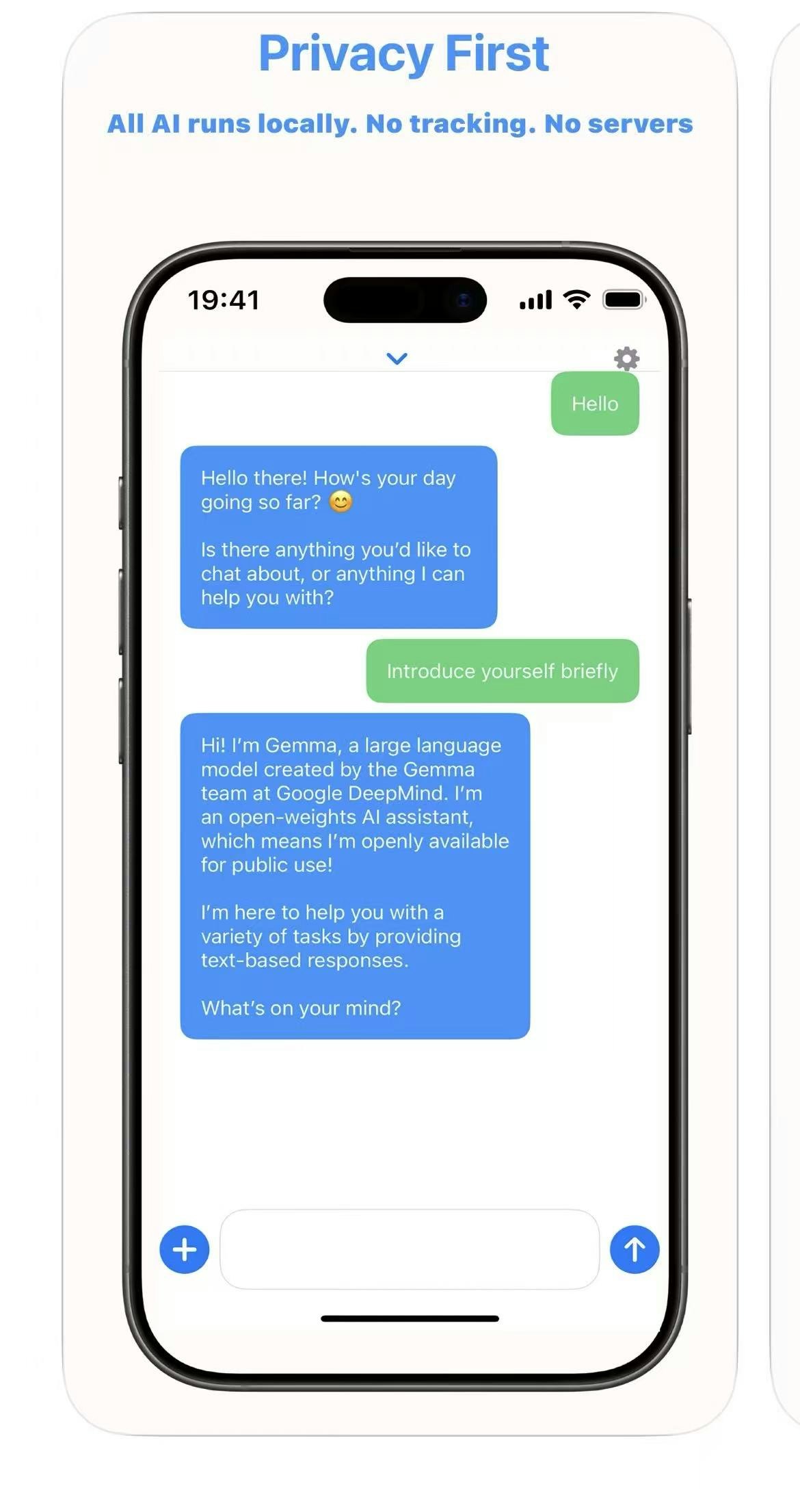

Summary: Local LLM-Vision is an iOS app that runs large language models (LLMs) and vision-language models (VLMs) fully offline on iPhone, using Apple's Metal framework for GPU acceleration. It processes text and images locally, with no internet connection and no data upload, and supports multiple models optimized for speed or for reasoning.

What it does

The app performs on-device inference for both LLMs and VLMs, enabling real-time chat and image analysis without cloud dependency. Users can switch between models based on performance and size requirements.
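To make the model-switching trade-off concrete, here is a minimal sketch of how a choice between models could be made from size and speed. It is not the app's implementation, which the listing does not describe. The `ModelOption` type, the `pick_model` function, the catalog entries, and all numbers are hypothetical, and using model size as a stand-in for reasoning ability is an assumption.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelOption:
    """One selectable on-device model. All fields are illustrative."""
    name: str               # hypothetical label, not a model the app ships
    size_gb: float          # approximate memory footprint once loaded
    tokens_per_sec: float   # rough generation speed on the target device
    supports_images: bool   # True for a vision-language model (VLM)


def pick_model(
    options: Sequence[ModelOption],
    memory_budget_gb: float,
    need_images: bool,
    prefer_speed: bool,
) -> Optional[ModelOption]:
    """Return the best model that fits the memory budget, or None if none fits."""
    candidates = [
        m for m in options
        if m.size_gb <= memory_budget_gb
        and (m.supports_images or not need_images)
    ]
    if not candidates:
        return None
    if prefer_speed:
        # Fastest model among those that fit.
        return max(candidates, key=lambda m: m.tokens_per_sec)
    # Assumption: a larger model reasons better, so take the largest that fits.
    return max(candidates, key=lambda m: m.size_gb)


# Hypothetical catalog; names and numbers are made up for illustration.
CATALOG = [
    ModelOption("small-llm", size_gb=1.0, tokens_per_sec=30.0, supports_images=False),
    ModelOption("large-llm", size_gb=4.0, tokens_per_sec=8.0, supports_images=False),
    ModelOption("small-vlm", size_gb=2.0, tokens_per_sec=15.0, supports_images=True),
]
```

With this catalog, a text-only chat on a 3 GB budget that favors speed would select `small-llm`, while an image-analysis request on the same budget would fall back to `small-vlm`, the only candidate that accepts images.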

Who it's for

It targets owners of modern iPhones who need AI that works offline, especially those who care about data privacy or are interested in edge inference.

Why it matters

Because inference runs entirely on-device, the app needs no internet connection or cloud service, and the text and images users submit never leave the phone.