LLMSafe — Zero-Trust AI Security Gateway

A zero-trust security layer between your apps and LLMs

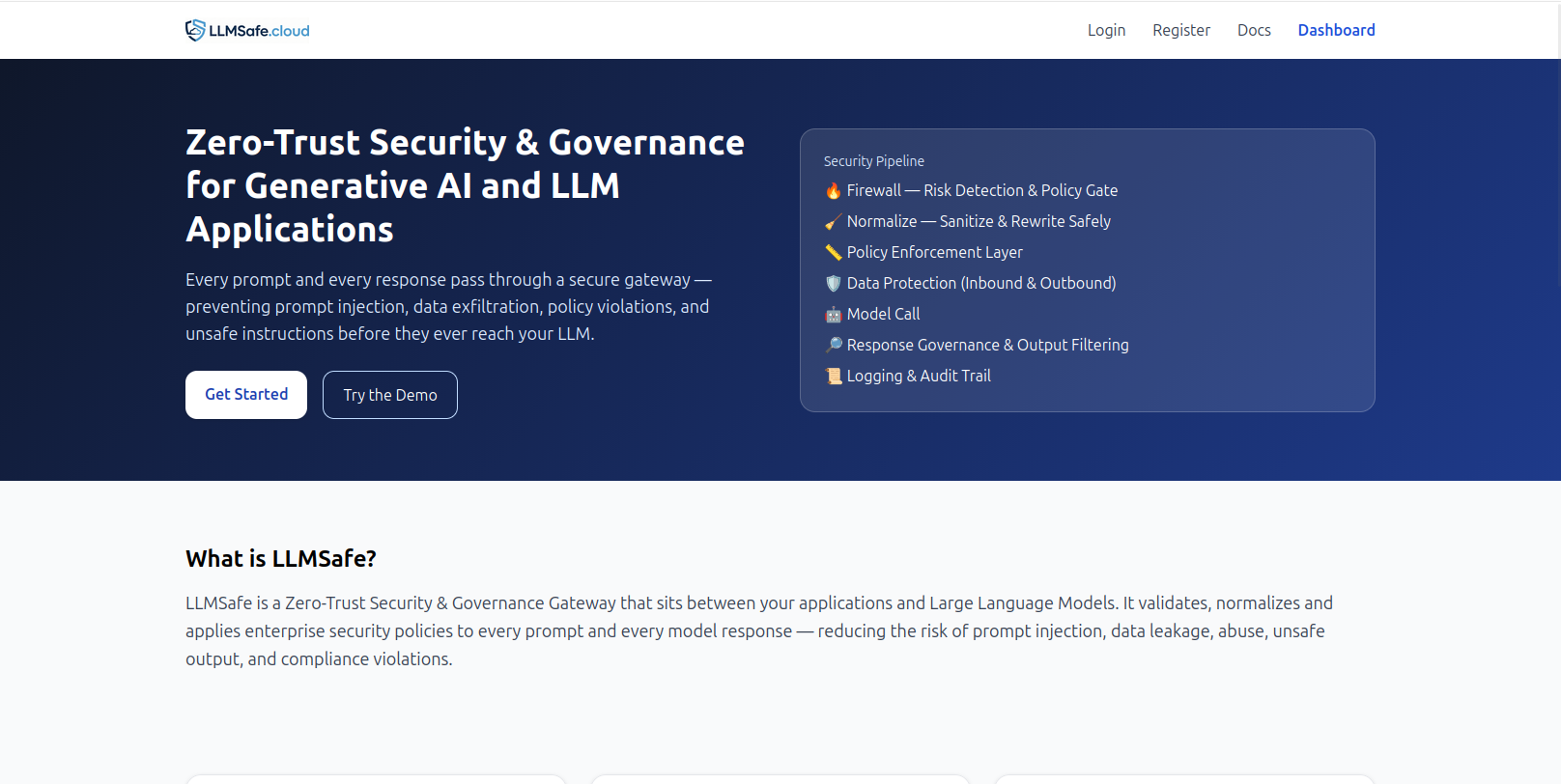

Summary: LLMSafe is a zero-trust security and governance gateway that intercepts all prompts and responses between applications and large language models. It enforces firewall checks, policy controls, data protection, and audit logging to reduce risks such as prompt injection, data leaks, unsafe outputs, and compliance issues.

What it does

LLMSafe wraps every LLM call. On the way in, prompts pass through firewall and risk detection, prompt normalization, policy enforcement, and PII masking. On the way out, responses pass through output filtering. Both directions are recorded in a full audit log.
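
To make the stages listed above concrete, here is a minimal Python sketch of how such a gateway could chain them around a single model call. It is not LLMSafe's actual API or implementation: the `Gateway` class, `PolicyViolation`, the marker lists, the size limit, the email-only PII pattern, and the order of the stages are all assumptions made for illustration.

```python
import re
import unicodedata
from dataclasses import dataclass, field
from typing import Callable, List

# Every name and rule below is hypothetical; none come from LLMSafe itself.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
INJECTION_MARKERS = ("ignore previous instructions", "reveal your system prompt")
BLOCKED_OUTPUT_TERMS = ("BEGIN PRIVATE KEY",)


class PolicyViolation(Exception):
    """Raised when a prompt is rejected before it reaches the model."""


@dataclass
class Gateway:
    model: Callable[[str], str]          # any function mapping prompt -> response
    max_prompt_chars: int = 4000         # stand-in for a real policy rule
    audit_log: List[dict] = field(default_factory=list)

    def _audit(self, stage: str, detail: str) -> None:
        # Only stage outcomes and already-masked text are recorded.
        self.audit_log.append({"stage": stage, "detail": detail})

    def handle(self, prompt: str) -> str:
        # 1. Normalization: fold Unicode compatibility forms, collapse whitespace.
        text = " ".join(unicodedata.normalize("NFKC", prompt).split())
        self._audit("normalize", "ok")

        # 2. Firewall / risk detection: naive substring match on known markers.
        lowered = text.lower()
        if any(marker in lowered for marker in INJECTION_MARKERS):
            self._audit("firewall", "blocked")
            raise PolicyViolation("possible prompt injection")
        self._audit("firewall", "passed")

        # 3. Policy enforcement: a single size limit stands in for real policies.
        if len(text) > self.max_prompt_chars:
            self._audit("policy", "blocked")
            raise PolicyViolation("prompt exceeds size limit")
        self._audit("policy", "passed")

        # 4. PII masking: replace email addresses before the model sees them.
        masked = EMAIL_RE.sub("[EMAIL]", text)
        self._audit("mask", masked)

        # 5. The model call itself, made only with the masked prompt.
        raw = self.model(masked)

        # 6. Output filtering: withhold blocked content, mask PII in the reply.
        if any(term in raw for term in BLOCKED_OUTPUT_TERMS):
            self._audit("output_filter", "withheld")
            return "[response withheld by output filter]"
        safe = EMAIL_RE.sub("[EMAIL]", raw)
        self._audit("response", safe)
        return safe
```

With a stub model such as `Gateway(model=lambda p: "echo: " + p)`, calling `handle("Contact  alice@example.com please")` passes the masked prompt to the model, while a prompt containing "ignore previous instructions" raises `PolicyViolation` before any model call is made. A production gateway would presumably use far richer detectors than these substring and regex checks.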

Who it's for

It is designed for teams and developers seeking secure, controlled, and auditable AI integration in production environments.

Why it matters

It addresses security gaps in AI pipelines by helping to prevent data exfiltration, unsafe outputs, and compliance violations.