Kimi K2.5

Native multimodal model with self-directed agent swarms


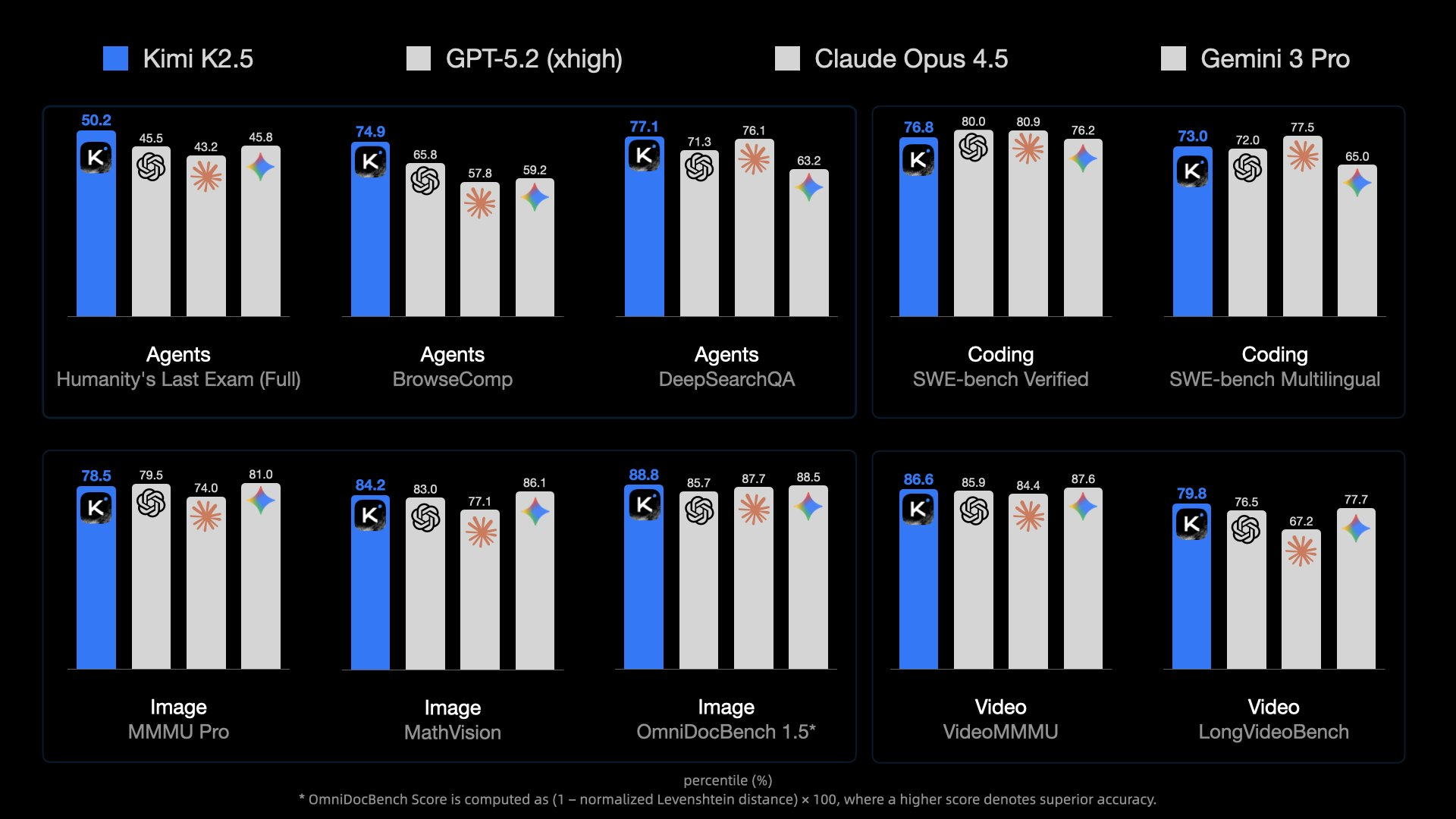

Summary: Kimi K2.5 is an open-source multimodal model that accepts text and visual inputs and achieves state-of-the-art performance on agent tasks, code generation, and visual understanding. It introduces an Agent Swarm system that autonomously spawns up to 100 sub-agents to execute workflows in parallel, making execution up to 4.5 times faster.

What it does

Kimi K2.5 processes multimodal inputs in both thinking and non-thinking modes and supports both dialogue and agent workflows. Its Agent Swarm paradigm executes tasks in parallel by spawning multiple sub-agents.
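The general idea of fanning a workflow out to parallel sub-agents and collecting their results can be illustrated with a minimal fan-out/fan-in sketch. This is not Kimi K2.5's implementation or API: `run_subagent`, `run_swarm`, and `MAX_SUBAGENTS` are hypothetical names, each sub-agent is simulated with `asyncio.sleep`, and only the cap of 100 sub-agents comes from the description above. It assumes sub-agent work is I/O-bound (waiting on model or tool calls), which is why asyncio concurrency is enough here.

```python
import asyncio
import time
from dataclasses import dataclass

# Hypothetical cap, taken from the "up to 100 sub-agents" figure; not a real API setting.
MAX_SUBAGENTS = 100
SIMULATED_WORK_SECONDS = 0.05


@dataclass
class SubtaskResult:
    subtask: str
    output: str


async def run_subagent(subtask: str) -> SubtaskResult:
    """Hypothetical sub-agent: stands in for a model call plus tool use."""
    await asyncio.sleep(SIMULATED_WORK_SECONDS)  # simulated I/O-bound work
    return SubtaskResult(subtask, f"done: {subtask}")


async def run_swarm(subtasks: list[str], max_parallel: int = MAX_SUBAGENTS) -> list[SubtaskResult]:
    """Fan subtasks out to sub-agents, at most `max_parallel` at a time,
    then gather the results back in input order (fan-in)."""
    limit = asyncio.Semaphore(max_parallel)

    async def bounded(subtask: str) -> SubtaskResult:
        async with limit:  # blocks while max_parallel sub-agents are already running
            return await run_subagent(subtask)

    # asyncio.gather preserves the order of its arguments in the returned list.
    return list(await asyncio.gather(*(bounded(s) for s in subtasks)))


if __name__ == "__main__":
    tasks = [f"subtask-{i}" for i in range(20)]
    start = time.perf_counter()
    results = asyncio.run(run_swarm(tasks))
    elapsed = time.perf_counter() - start
    sequential = SIMULATED_WORK_SECONDS * len(tasks)
    print(f"{len(results)} results in {elapsed:.2f}s (sequential: ~{sequential:.2f}s)")
```

Because the sub-agents run concurrently, wall-clock time tracks the slowest batch of sub-agents rather than the sum of all subtask times. A real orchestrator would also have to decide how to split the task and how to merge the results, which this sketch leaves out.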

Who it's for

It targets developers and users who need advanced multimodal AI for code generation, visual analysis, and complex agent-based workflows.

Why it matters

It reduces execution time for complex tasks through scalable parallelism and supports practical applications like video-to-code generation.