ImageShielding for Art

Stop Generative AI from stealing your style.

#Art

#Artificial Intelligence

ImageShielding for Art – Prevent AI from copying your style

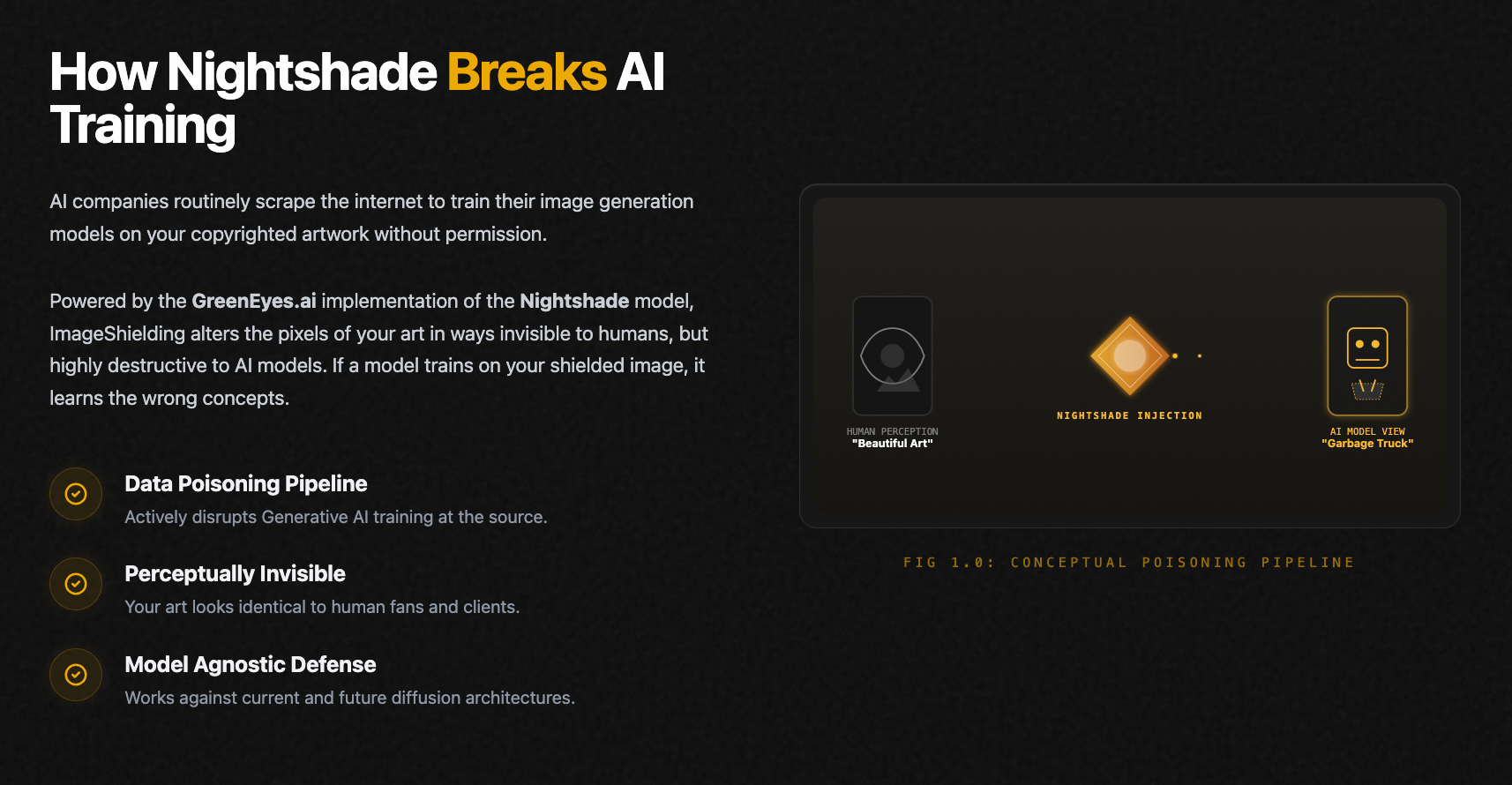

Summary: ImageShielding applies Nightshade data poisoning to artwork to corrupt AI training data. It changes pixels in ways that are undetectable to humans but harmful to AI, so models like Midjourney cannot learn accurate representations of your images.

What it does

It alters an artwork's pixels in ways humans cannot see. AI models trained on the altered images learn incorrect concepts, which degrades their output quality.
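To give a rough feel for what "invisible to humans" can mean in practice, here is a minimal Python sketch that shifts each pixel value by no more than a small budget. It is an illustration only, not ImageShielding's or Nightshade's actual method: real poisoning tools choose the changes deliberately to mislead a model, while this sketch uses random noise. The names `perturb_pixels`, `max_change`, and `epsilon` are hypothetical and do not come from the product.

```python
import random


def perturb_pixels(pixels, epsilon=4, seed=0):
    """Return a copy of `pixels` (0-255 values) with each value shifted by at most `epsilon`.

    Assumption for illustration: the shift is random noise. An actual
    poisoning tool would compute the shift to mislead a model instead.
    """
    rng = random.Random(seed)
    shielded = []
    for value in pixels:
        delta = rng.randint(-epsilon, epsilon)
        # Clamp to the valid range; clamping never increases the change.
        shielded.append(min(255, max(0, value + delta)))
    return shielded


def max_change(original, modified):
    """Largest absolute per-pixel difference between two pixel lists."""
    return max(abs(a - b) for a, b in zip(original, modified))


original = [0, 12, 128, 200, 255, 64, 33, 250]
shielded = perturb_pixels(original, epsilon=4)
print(max_change(original, shielded) <= 4)
```

A budget of a few levels out of 255 per pixel is typically hard to notice by eye, which is the property the description above relies on.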

Who it's for

Artists and creators who want to protect their copyrighted work from unauthorized AI training.

Why it matters

It deters AI companies from scraping copyrighted art and training on it without permission, preserving artistic integrity.