Edge AI

Intelligent Data Compression

#Internet of Things

#Artificial Intelligence

#Tech

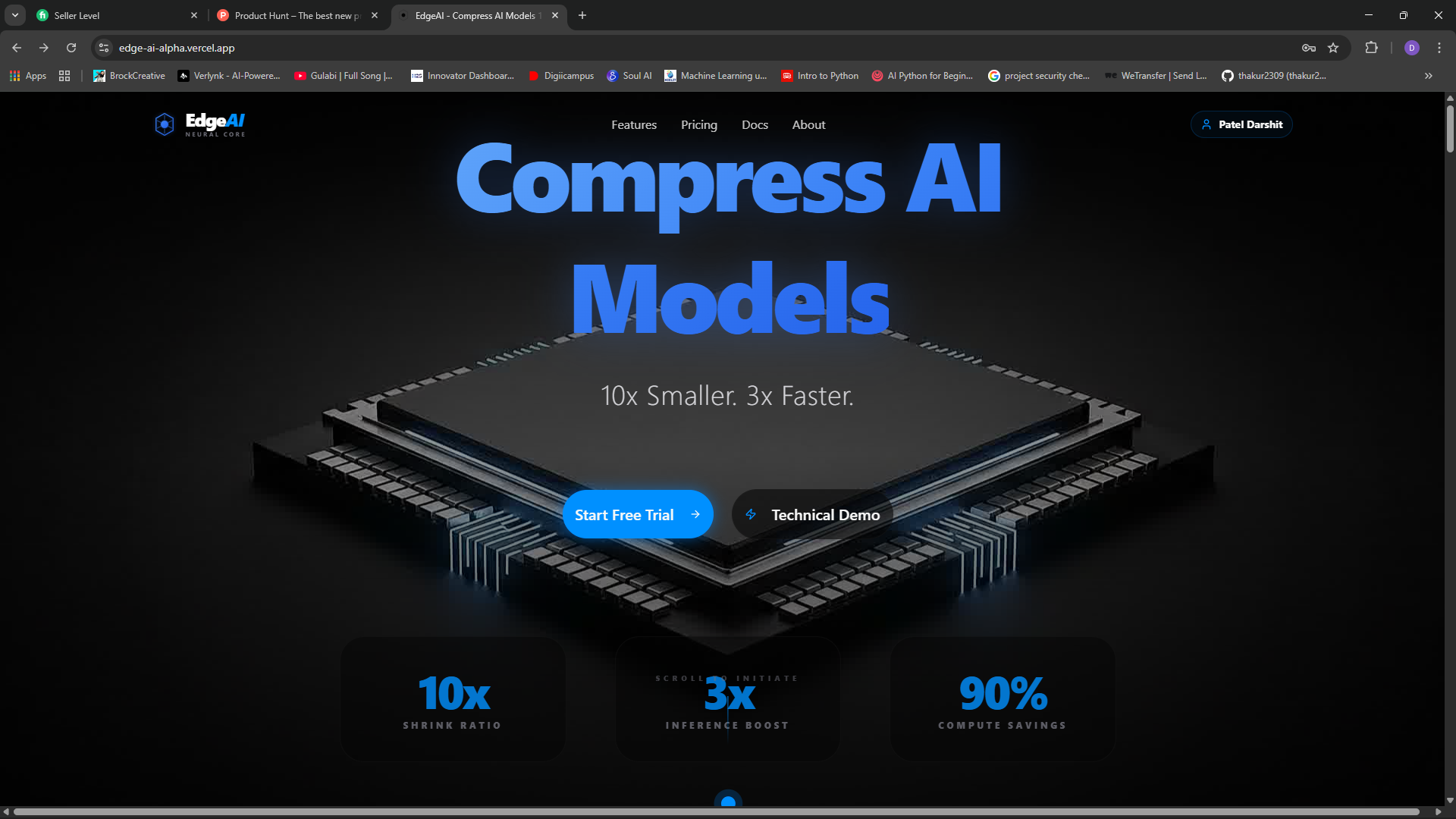

Edge AI – Intelligent Data Compression for AI Models

Summary: Edge AI shrinks AI models by up to 10x using INT8/INT4 quantization, enabling deployment on edge devices, mobile platforms, and IoT hardware while reducing inference costs by 90%.

What it does

It applies INT8 and INT4 quantization to shrink AI models so they can be deployed efficiently on resource-constrained devices.
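To make the idea concrete, here is a minimal sketch of INT8 quantization in plain Python. It assumes a symmetric, per-tensor scheme, which is one common approach and not necessarily the one Edge AI uses. The function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical, chosen only for illustration.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats onto the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = array(
        "b",  # signed 8-bit integers, one byte per weight
        (max(-127, min(127, round(w / scale))) for w in weights),
    )
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Illustrative weights only (assumed values, not taken from a real model).
weights = [0.82, -1.27, 0.003, 0.45, -0.61]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

fp32_bytes = len(weights) * 4       # 4 bytes per 32-bit float
int8_bytes = len(q) * q.itemsize    # 1 byte per quantized weight
print(list(q), fp32_bytes, int8_bytes)
```

Each restored weight differs from the original by at most half of `scale`, which is the accuracy given up in exchange for storing one byte per weight instead of four. INT4 follows the same pattern with a narrower integer range, typically packing two weights into each byte.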

Who it's for

Developers deploying AI models on edge devices, mobile platforms, and IoT applications.

Why it matters

It reduces model size and inference costs, making AI deployment more efficient and affordable on limited hardware.
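As a rough illustration of the size reduction, the sketch below estimates weight storage at different bit widths. The 100-million-parameter model and the helper `weight_storage_bytes` are assumptions for the example, and the FP32 baseline is also assumed. The estimate ignores quantization metadata such as scale factors and any layers kept at higher precision, so real ratios will differ.

```python
def weight_storage_bytes(num_params, bits_per_weight):
    """Bytes needed to store the weights alone, rounded up to a whole byte."""
    return (num_params * bits_per_weight + 7) // 8


num_params = 100_000_000  # hypothetical model size, for illustration only

sizes = {
    "FP32": weight_storage_bytes(num_params, 32),
    "INT8": weight_storage_bytes(num_params, 8),
    "INT4": weight_storage_bytes(num_params, 4),
}

for name, size in sizes.items():
    ratio = sizes["FP32"] / size
    print(f"{name}: {size / 1e6:.0f} MB ({ratio:.0f}x smaller than FP32)")
```

Under these assumptions the weights drop from 400 MB in FP32 to 100 MB in INT8 and 50 MB in INT4. Less memory to load and move per inference is a large part of why smaller models fit on limited hardware.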