Cleq

Score how well your team guards AI-generated code

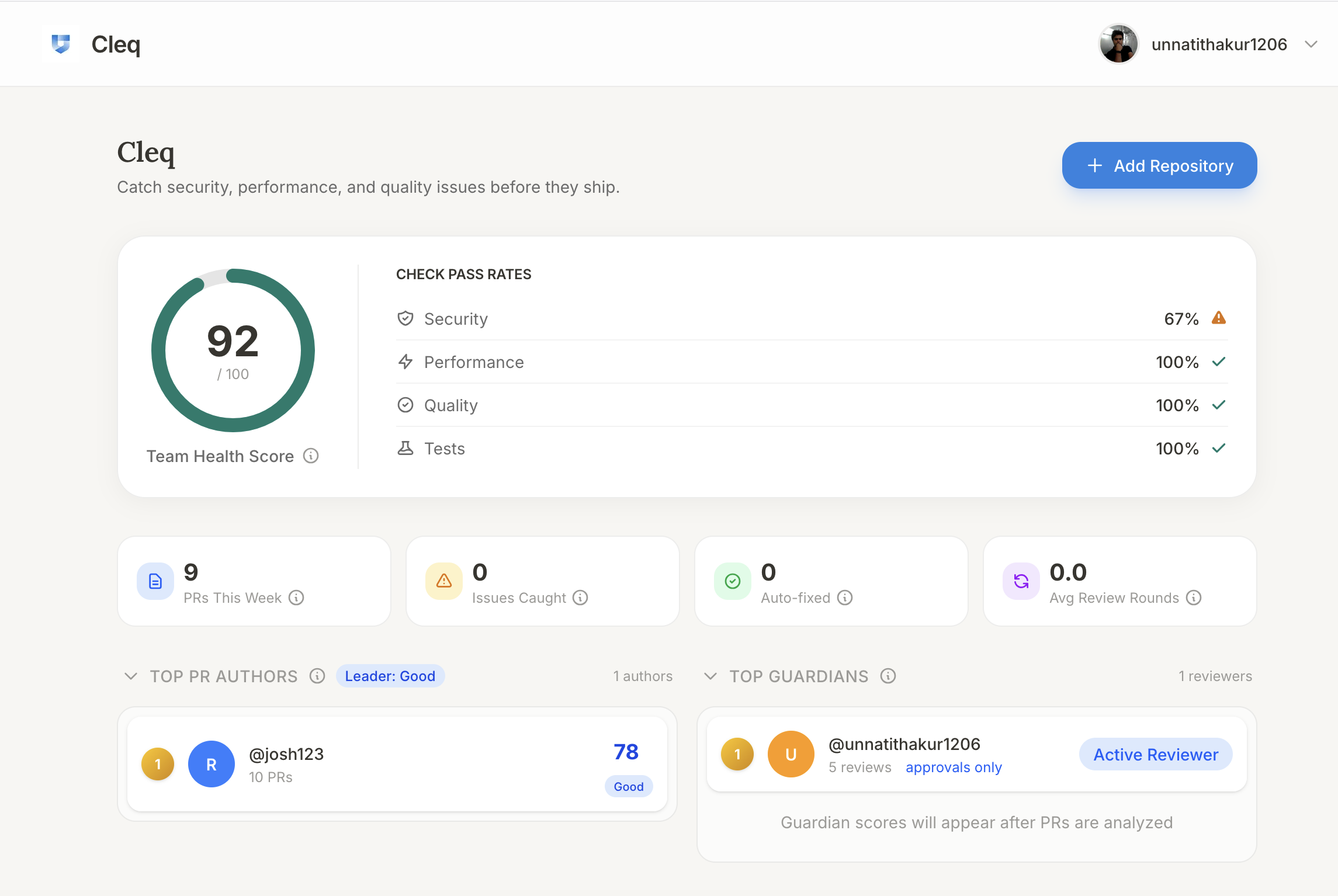

Cleq – Scores team effectiveness in reviewing AI-generated code

Summary: Cleq is a GitHub App that evaluates AI-generated code and reviewer performance by scoring pull requests on security, performance, and quality. It tracks individual and team metrics to identify how well reviewers catch AI mistakes and improve code quality.

What it does

Cleq gives each pull request a PR Score (0-100) for code quality and a Guardian Score (0-100) for reviewer effectiveness, and reports a Team Health metric that assesses AI’s overall impact on the team's code. It analyzes the entire review process, including whether review feedback is addressed.

Who it's for

Cleq is designed for engineering managers and development teams that use AI code suggestions and want to measure code review quality and team performance.

Why it matters

Cleq addresses the problem of developers blindly accepting AI-generated code. It quantifies each reviewer's impact so that quality issues are caught before code reaches production.