AgentAudit

The "Lie Detector" API for RAG & AI Agents

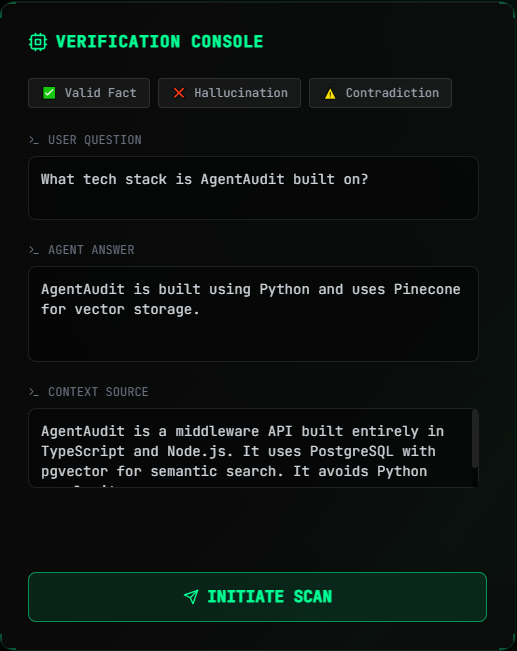

AgentAudit – Middleware API to verify LLM responses against source context

Summary: AgentAudit is a middleware API that intercepts LLM-generated answers and verifies them against the retrieved context in real time, catching hallucinations before responses reach users. It uses semantic comparison to flag claims the source does not support, making the outputs of retrieval-augmented generation (RAG) agents more reliable.
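The interception pattern described above can be sketched as a gate that sits between the agent and the frontend. This is a minimal sketch, not AgentAudit's API: the names `audited_response`, `AuditResult`, `naive_verifier` and `FALLBACK_MESSAGE` are hypothetical, and the placeholder verifier only does a verbatim substring match. In a real pipeline you would pass in a verifier that calls the actual service.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical fallback shown to users when an answer fails the audit.
FALLBACK_MESSAGE = "I couldn't verify that answer against the sources."


@dataclass
class AuditResult:
    supported: bool
    unsupported_claims: list[str] = field(default_factory=list)


# Any callable (context, answer) -> AuditResult can act as the verifier,
# e.g. a client for a remote verification service (assumption).
Verifier = Callable[[str, str], AuditResult]


def naive_verifier(context: str, answer: str) -> AuditResult:
    """Placeholder check: a sentence counts as supported only if it
    appears verbatim (case-insensitive) in the context."""
    claims = [s.strip() for s in answer.split(".") if s.strip()]
    missing = [c for c in claims if c.lower() not in context.lower()]
    return AuditResult(supported=not missing, unsupported_claims=missing)


def audited_response(context: str, answer: str,
                     verify: Verifier = naive_verifier) -> tuple[str, AuditResult]:
    """Sit between the agent and the frontend: pass the answer through
    only if the verifier accepts it, otherwise substitute the fallback."""
    result = verify(context, answer)
    text = answer if result.supported else FALLBACK_MESSAGE
    return text, result
```

For example, with the context "The Eiffel Tower is in Paris. It opened in 1889.", the answer "The Eiffel Tower is in Rome." is replaced by the fallback, and `result.unsupported_claims` holds the offending sentence for logging. Keeping the verifier pluggable means the same gate works whether the agent runs in LangChain, Flowise or a custom backend.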

What it does

AgentAudit receives the retrieved context and the generated answer, then applies strict checks to confirm that every claim in the answer is grounded in the source text. It flags hallucinations and citation errors immediately, acting as a semantic firewall between the agent and the frontend.
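A claim-by-claim check of this kind can be sketched as follows. This sketch swaps in a plain lexical-overlap heuristic in place of AgentAudit's semantic comparison, so it only illustrates the shape of the check. `check_claims`, `ClaimCheck`, `STOPWORDS`, the 0.8 threshold and the `[n]` citation convention are all hypothetical. Each sentence of the answer is treated as one claim. A claim is scored by the fraction of its content words found in the best-matching context chunk. A claim that cites a chunk is checked only against that chunk, which is how a citation error shows up.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately tiny stop-word list.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "were", "of", "in", "on",
             "and", "to", "it", "its"}
# Hypothetical citation convention: "[n]" refers to the n-th context chunk (1-based).
CITATION = re.compile(r"\[(\d+)\]")


@dataclass
class ClaimCheck:
    claim: str
    score: float      # fraction of the claim's content words found in the best chunk
    grounded: bool


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words minus stop-words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def split_claims(answer: str) -> list[str]:
    """Crude sentence splitter: one claim per sentence."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]


def check_claims(context_chunks: list[str], answer: str,
                 threshold: float = 0.8) -> list[ClaimCheck]:
    chunk_tokens = [tokens(c) for c in context_chunks]
    results = []
    for claim in split_claims(answer):
        cited = [int(n) - 1 for n in CITATION.findall(claim)]
        claim_toks = tokens(CITATION.sub("", claim))
        if not claim_toks:
            continue
        # A cited claim is checked only against the chunks it cites;
        # an out-of-range citation leaves no candidates and scores 0.
        if cited:
            candidates = [chunk_tokens[i] for i in cited if 0 <= i < len(chunk_tokens)]
        else:
            candidates = chunk_tokens
        score = max((len(claim_toks & ct) / len(claim_toks) for ct in candidates),
                    default=0.0)
        results.append(ClaimCheck(claim, score, score >= threshold))
    return results
```

With the chunks "The Eiffel Tower opened in 1889." and "It is located in Paris, France.", the answer "The Eiffel Tower opened in 1889. It was designed by Gustave Eiffel." yields one grounded claim (score 1.0) and one flagged claim (score 0.25). The answer "The Eiffel Tower opened in 1889 [2]." is also flagged, because the cited chunk 2 does not support it even though chunk 1 does. Word overlap cannot detect negation or paraphrase, which is why a production check needs semantic comparison rather than this heuristic.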

Who it's for

It is designed for developers building RAG and AI agent pipelines who need a deterministic way to verify LLM outputs, whether they use LangChain, Flowise, or a custom backend.

Why it matters

AgentAudit targets silent failures, where retrieval returns accurate context but the LLM still confidently states incorrect details. Catching these errors before users see them improves trust and safety.